Trends in Data Server Tech

The world of data server technology is rapidly evolving, with new trends and advancements emerging every day. Whether you are a business owner, IT professional, or simply interested in the field, staying up-to-date with the latest trends is crucial. In this blog article, we will explore the current and future trends in data server technology, providing you with a detailed and comprehensive guide.

As data continues to grow exponentially, the demand for more efficient and scalable data server solutions is on the rise. From the adoption of cloud-based servers to the emergence of edge computing, the landscape of data server technology is undergoing a significant transformation. Understanding these trends and their implications can help businesses make informed decisions and stay ahead in this rapidly changing digital era.

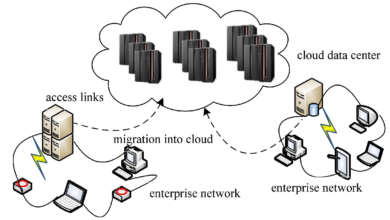

Cloud-based Servers: Revolutionizing Data Storage and Management

Cloud-based servers have revolutionized the way organizations store and manage their data. With the ability to access and store data remotely, cloud-based servers offer numerous benefits, including scalability, cost-effectiveness, and flexibility. In this section, we will delve into the advantages of using cloud-based servers and explore the emerging trends in this space.

The Advantages of Cloud-based Servers

One of the primary advantages of cloud-based servers is their scalability. Unlike traditional physical servers, cloud-based servers allow businesses to quickly scale their infrastructure to accommodate changing data storage needs. Whether you need to increase or decrease your storage capacity, cloud-based servers offer the flexibility to do so with ease.

Another advantage of cloud-based servers is their cost-effectiveness. With cloud-based servers, businesses can avoid the upfront costs associated with purchasing and maintaining physical hardware. Instead, they can pay for the resources they use on a pay-as-you-go or subscription basis, making it a more affordable option for many organizations.

Flexibility is also a key advantage of cloud-based servers. With the ability to access data from anywhere, employees can work remotely and collaborate seamlessly. This flexibility allows businesses to adapt to changing work environments and enables employees to be more productive.

Emerging Trends in Cloud-based Servers

Cloud-based servers continue to evolve, and several emerging trends are shaping their future. One such trend is the rise of serverless computing. Serverless computing allows businesses to focus on writing code and deploying applications without the need to manage infrastructure. With this trend, organizations can reduce costs and improve scalability by only paying for the resources used when executing code.
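
To make the serverless model more concrete, here is a minimal sketch of a function that a platform would invoke once per request. The `handler(event, context)` shape follows a convention used by several providers, but the event field `name` and the response layout are assumptions chosen for illustration, not any specific provider's API.

```python
import json


def handler(event, context=None):
    """Hypothetical entry point that a serverless platform calls per request.

    The platform, not the developer, provisions and scales whatever runs
    this function; billing is typically tied to invocations and run time.
    """
    # 'name' is an assumed field in the incoming event payload.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation for testing; no server process is started.
    print(handler({"name": "data team"}))
```

The developer writes only the function body; capacity planning, patching, and scaling are left to the platform.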

Another trend in cloud-based servers is the adoption of multi-cloud and hybrid cloud solutions. As organizations look for more flexibility and redundancy, they are increasingly leveraging multiple cloud providers or combining public and private clouds. This trend allows businesses to choose the best services from different providers and optimize their infrastructure for performance and cost-efficiency.

Additionally, cloud-based servers are becoming more intelligent with the integration of artificial intelligence (AI) and machine learning (ML) capabilities. AI and ML technologies can analyze data patterns, automate tasks, and optimize resource allocation in cloud-based servers. This trend enables businesses to improve efficiency and make data-driven decisions in real time.

Edge Computing: Bringing Data Processing Closer to the Source

Edge computing is gaining momentum as a solution to the challenges posed by the increasing volume and speed of data. By bringing data processing closer to the source, edge computing reduces latency, improves responsiveness, and minimizes bandwidth usage. In this section, we will discuss the concept of edge computing, its advantages, and the trends shaping its future.

The Concept of Edge Computing

Traditionally, data processing has been centralized in large data centers, which can lead to latency issues, especially for applications requiring real-time responses. Edge computing addresses this challenge by moving data processing closer to the source, such as IoT devices or edge servers. This proximity allows for faster data analysis, reduced latency, and improved performance.

Edge computing involves deploying computing resources, including servers, storage, and networking, at the edge of the network, near the data source. This decentralized approach enables data to be processed and analyzed locally, eliminating the need to send all data to a central data center. Only relevant data or insights are transmitted to the cloud or data center for further analysis or storage.

Advantages and Benefits of Edge Computing

There are several advantages and benefits associated with edge computing. One primary advantage is reduced latency. By processing data closer to the source, edge computing can significantly reduce the time it takes for data to travel over the network. This is especially critical for applications that require real-time or near real-time responses, such as autonomous vehicles or industrial automation.

Another benefit of edge computing is improved responsiveness. With data being processed locally, edge devices can quickly respond to events or triggers without relying on a centralized data center. This capability is crucial for applications that require immediate action, such as security systems or predictive maintenance in industrial settings.

Furthermore, edge computing can help reduce bandwidth usage. By processing and analyzing data locally, only relevant information needs to be transmitted to the cloud or data center, reducing the amount of data that needs to be transferred over the network. This can result in significant cost savings, especially in scenarios where data transmission is costly or limited.
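
The bandwidth saving described above can be sketched in a few lines. In this hypothetical example, an edge node reduces a batch of raw sensor readings to a small summary plus any out-of-range values, and only that payload would be sent upstream. The function name and the thresholds are assumptions for illustration.

```python
from statistics import mean


def summarize_at_edge(readings, low=10.0, high=80.0):
    """Reduce raw sensor readings to a compact payload for upstream transfer.

    `low` and `high` are illustrative thresholds, not values from any standard.
    """
    out_of_range = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2) if readings else None,
        "out_of_range": out_of_range,
    }


raw = [21.5, 22.0, 21.8, 95.2, 22.1, 21.9]
payload = summarize_at_edge(raw)
print(payload)
```

Six readings collapse into one small record, and the single abnormal value is still forwarded in full so the central system can act on it.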

Emerging Trends in Edge Computing

As edge computing continues to evolve, several trends are shaping its future. One such trend is the integration of AI and ML capabilities at the edge. By combining edge computing with AI and ML, organizations can harness the power of intelligent decision-making at the edge of the network. This trend enables real-time data analysis and autonomous decision-making in edge devices, without relying on a centralized data center or cloud.

Another trend in edge computing is the convergence of edge and cloud computing. This trend, often referred to as fog computing, aims to bridge the gap between edge devices and the cloud by bringing cloud-like capabilities to the edge. Fog computing allows for distributed data processing, storage, and analytics across a network of edge devices, fog nodes, and cloud resources. This convergence enables more complex and resource-intensive applications to be deployed at the edge, while still leveraging the benefits of the cloud.

Additionally, edge computing is witnessing advancements in security and privacy. As more data is processed and analyzed at the edge, ensuring the security and privacy of that data becomes paramount. This trend involves the development of secure edge devices, encrypted communication protocols, and privacy-preserving techniques for data processing. These advancements aim to address the concerns surrounding data security and privacy in edge computing environments.

Artificial Intelligence and Machine Learning in Data Servers

Artificial intelligence (AI) and machine learning (ML) are revolutionizing data server technology by enabling intelligent data management, analysis, and decision-making. With AI and ML capabilities, data servers can automate tasks, uncover valuable insights, and optimize resource allocation. In this section, we will delve into the role of AI and ML in data servers and their impact on the industry.

The Role of AI and ML in Data Servers

AI and ML technologies have a significant impact on data servers, transforming them into intelligent systems capable of autonomously managing, analyzing, and optimizing data. These technologies can automate repetitive tasks, such as data cleaning and preprocessing, freeing up human resources for more complex analysis and decision-making.

ML algorithms can analyze large datasets to identify patterns, correlations, and anomalies that may not be apparent to human analysts. These insights can help businesses make data-driven decisions, uncover hidden opportunities, and improve operational efficiency. Additionally, ML algorithms can continuously learn from new data, adapting and improving their performance over time.

Applications of AI and ML in Data Servers

AI and ML find applications in various aspects of data server technology. One such application is predictive analytics. ML algorithms can analyze historical data to identify patterns and trends, enabling businesses to make predictions about future events or outcomes. This capability is particularly useful in areas such as demand forecasting, fraud detection, and preventive maintenance.

Another application of AI and ML in data servers is anomaly detection. ML algorithms can learn the normal behavior of a system or dataset and identify any deviations from that norm. This can help detect and mitigate security breaches, system failures, or other abnormal events that may impact the performance or security of data servers.
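
As a simple illustration of learning "normal" and flagging deviations, the sketch below uses a z-score test, a basic statistical stand-in for the ML models described above. The latency figures and the threshold of three standard deviations are assumed values.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the
    mean of a baseline sample that represents normal behavior."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


baseline_latency_ms = [102, 98, 101, 99, 100, 103, 97, 100]
incoming = [101, 99, 250, 100]
print(find_anomalies(baseline_latency_ms, incoming))
```

Production systems usually replace the fixed baseline with a model that is retrained as new data arrives, but the principle of comparing observations against learned normal behavior is the same.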

Furthermore, AI and ML can optimize resource allocation in data servers. By analyzing historical usage patterns and workload characteristics, ML algorithms can dynamically allocate computing resources, such as CPU, memory, and storage, to ensure optimal performance and cost-efficiency. This capability is especially valuable in cloud-based server environments, where resource utilization can vary significantly over time.
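
A heavily simplified version of usage-driven allocation is proportional scaling: estimate how many instances would bring average CPU use back to a target level. The sketch below is a rule-based stand-in for the ML-driven approach described above; the function name, the 60 percent target, and the instance cap are assumptions.

```python
import math


def desired_instances(current, recent_cpu_percent, target_percent=60, max_instances=20):
    """Suggest an instance count that would bring average CPU use near the target."""
    if not recent_cpu_percent:
        return current  # no data, leave the allocation unchanged
    avg = sum(recent_cpu_percent) / len(recent_cpu_percent)
    wanted = math.ceil(current * avg / target_percent)
    # Keep the suggestion within sensible bounds.
    return max(1, min(max_instances, wanted))


# Four instances averaging 90% CPU suggests scaling out.
print(desired_instances(4, [85, 90, 95]))
```

An ML-based allocator would replace the simple average with a forecast of upcoming load, so capacity can be added before demand arrives rather than after.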

Data Security: Protecting Data in an Increasingly Vulnerable Landscape

With the growing threat of cyberattacks and data breaches, data security has become a top priority for businesses. Ensuring the confidentiality, integrity, and availability of data is critical to maintaining customer trust and complying with data protection regulations. In this section, we will explore the latest trends and technologies in data server security, including encryption, authentication, and intrusion detection systems.

Data Encryption: Safeguarding Data at Rest and in Transit

Data encryption is a fundamental component of data server security. Encryption techniques transform data into an unreadable format, making it unintelligible to unauthorized individuals. Encryption can be applied to data at rest, stored on disks or databases, as well as data in transit, transmitted over networks.

One trend in data encryption is the adoption of stronger and more efficient encryption algorithms. As computing power increases, encryption algorithms need to keep pace to withstand brute-force attacks. Modern algorithms, such as AES (Advanced Encryption Standard), provide a higher level of security than older algorithms such as DES. Additionally, sound management of encryption keys, both symmetric and asymmetric, plays a crucial role in ensuring the confidentiality of encrypted data.

Another trend in data encryption is the widespread adoption of encryption for data in transit. With the increase in data transmission over networks, securing data as it travels between servers, clients, and other endpoints is essential. The Transport Layer Security (TLS) protocol, the successor to the now-deprecated Secure Sockets Layer (SSL), provides secure communication channels by encrypting data during transmission. Each new version of the protocol, most recently TLS 1.3, removes weaker cryptographic options and closes known vulnerabilities.
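
For encryption in transit, a client can be configured to reject outdated protocol versions. The following sketch uses Python's standard `ssl` module; choosing TLS 1.2 as the minimum is an assumption for illustration, and the right floor depends on the systems you need to talk to.

```python
import ssl

# Build a client-side context with the library's secure defaults:
# certificate verification and hostname checking are enabled.
context = ssl.create_default_context()

# Refuse legacy protocol versions (SSL 3.0, TLS 1.0, TLS 1.1).
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.minimum_version.name, context.verify_mode.name, context.check_hostname)
```

The resulting context can then be passed to socket or HTTP client code so that every connection it opens follows the same policy.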

Authentication and Access Control: Verifying Identities and Granting Permissions

Authentication and access control mechanisms play a vital role in protecting data servers from unauthorized access. Robust authentication methods, such as username-password combinations, multi-factor authentication, and biometric authentication, help verify users’ identities before granting access to sensitive data.

One trend in authentication is the adoption of biometric authentication methods. Biometric traits, such as fingerprints, facial features, or iris patterns, can offer a higher level of security than traditional password-based authentication. Because these traits are unique to each individual, they make it more difficult for unauthorized individuals to gain access to data servers.

Access control is another critical aspect of data server security. Role-based access control (RBAC) and attribute-based access control (ABAC) are commonly used approaches to grant or restrict access to specific resources based on users’ roles or attributes. The trend in access control is towards more granular and dynamic control, allowing organizations to define fine-grained permissions based on contextual information, such as time, location, or device.
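
The combination of role-based and context-aware checks can be sketched as follows. The roles, permissions, and the business-hours rule are all hypothetical; a real deployment would load them from a policy store rather than hard-code them.

```python
from datetime import time

# RBAC: each role maps to the set of actions it may perform (assumed roles).
ROLE_PERMISSIONS = {
    "admin": {"read", "write", "delete"},
    "analyst": {"read"},
}


def is_allowed(role, action, request_time, trusted_device):
    """Return True if the request passes both the role and context checks."""
    # Role-based step: the role must grant the action at all.
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    # Attribute-based step: destructive actions also require a trusted
    # device and a request made during business hours (09:00 to 17:00).
    if action == "delete":
        in_hours = time(9, 0) <= request_time <= time(17, 0)
        return bool(trusted_device and in_hours)
    return True


print(is_allowed("admin", "delete", time(10, 30), trusted_device=True))
print(is_allowed("admin", "delete", time(22, 0), trusted_device=True))
```

The same admin account is allowed to delete during the day but refused at night, which is the kind of fine-grained, contextual decision that plain role checks cannot express.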

Intrusion Detection and Prevention Systems: Detecting and Mitigating Attacks

Intrusion detection and prevention systems (IDPS) are essential components of data server security, helping organizations detect and respond to potential threats or attacks. These systems monitor network traffic, system logs, and other data sources to identify suspicious activities or patterns indicating a security breach.

The trend in IDPS is towards the use of advanced machine learning algorithms and artificial intelligence techniques for more accurate and proactive threat detection. ML algorithms can analyze large volumes of data, including network traffic patterns, system logs, and user behavior, to identify anomalous activities that may indicate a security breach. These algorithms can continuously learn from new data, improving their detection capabilities over time.

Additionally, IDPS are evolving to provide real-time response capabilities, enabling organizations to automatically block or mitigate threats as they occur. This trend involves the integration of IDPS with other security components, such as firewalls and security information and event management (SIEM) systems, to create a comprehensive security ecosystem.

Green Data Centers: Sustainable Solutions for a Greener Future

As concerns over environmental sustainability grow, the demand for energy-efficient data centers is on the rise. Traditional data centers consume significant amounts of energy for cooling, power supply, and equipment operation. In this section, we will discuss the trends in green data center technologies, such as renewable energy integration, cooling optimization, and resource recycling.

Renewable Energy Integration: Powering Data Centers with Green Energy

One trend in green data center technologies is the integration of renewable energy sources to power data center operations. As the cost of renewable energy technologies, such as solar and wind, continues to decrease, more data centers are adopting these sources to reduce their carbon footprint. Renewable energy integration not only helps data centers become more sustainable but also provides cost savings in the long run by reducing reliance on traditional energy sources.

Another trend in renewable energy integration is the use of energy storage systems. Energy storage technologies, such as batteries or flywheels, can store excess energy generated by renewable sources during periods of low demand. This stored energy can then be used to power data center operations during peak demand periods or when renewable energy generation is limited. Energy storage systems help data centers become more independent from the grid and ensure a continuous and reliable power supply.

Cooling Optimization: Efficiently Managing Data Center Heat

Cooling is a significant contributor to the energy consumption of data centers. To reduce energy usage and improve efficiency, data centers are adopting various cooling optimization techniques. One trend is the use of advanced cooling systems, such as liquid cooling or direct-to-chip cooling. These systems provide more efficient heat dissipation, reducing the energy required for cooling compared to traditional air-based cooling methods.

Furthermore, data centers are leveraging techniques like computational fluid dynamics (CFD) simulations and airflow management to optimize cooling efficiency. CFD simulations help identify hotspots and airflow patterns within data centers, allowing for targeted cooling solutions. Proper airflow management, including the use of hot and cold aisles, containment systems, and optimized ventilation, ensures efficient cooling and reduces energy waste.

Resource Recycling: Minimizing Waste and Maximizing Efficiency

Data centers generate a significant amount of electronic waste, including outdated servers, networking equipment, and storage devices. To minimize waste and maximize efficiency, data centers are adopting resource recycling practices. This trend involves the responsible disposal or repurposing of outdated equipment, ensuring that valuable resources are not wasted.

One approach to resource recycling is refurbishing or reselling decommissioned equipment that is still functional. This not only reduces waste but also provides an opportunity for other organizations to benefit from affordable, refurbished equipment. Additionally, data centers are exploring recycling programs to recover valuable materials from end-of-life equipment, such as precious metals, plastics, and rare earth elements.

Another aspect of resource recycling is the use of circular economy principles in data center design and operations. This trend involves designing data centers with modularity and scalability in mind, allowing for easy upgrades or replacements of components. By adopting a circular economy approach, data centers can extend the lifespan of their infrastructure, reduce waste generation, and improve resource efficiency.

Virtualization: Optimizing Resource Utilization and Flexibility

Virtualization technology enables the efficient utilization of server resources and provides flexibility in deploying and managing data servers. By abstracting physical hardware and creating virtual instances, virtualization allows multiple operating systems and applications to run on a single physical server. In this section, we will explore the latest trends in virtualization, including containerization and software-defined infrastructure.

Containerization: Lightweight and Portable Virtualization

Containerization is a trend in virtualization that offers lightweight and portable virtualization solutions. Unlike traditional virtual machines (VMs), which require a full operating system for each instance, containers share the host operating system while providing isolated environments for running applications. This lightweight approach allows for faster deployment, efficient resource utilization, and easy scalability.

Container tools such as Docker, along with orchestration platforms such as Kubernetes, have gained popularity due to their ability to simplify application deployment and management. Containers can be easily replicated, moved, or scaled horizontally, enabling organizations to quickly respond to changing demands. Additionally, containerization promotes DevOps practices by facilitating collaboration between development and operations teams, leading to faster application development and deployment cycles.

Software-Defined Infrastructure: Flexibility and Automation

Software-defined infrastructure (SDI) is a trend that leverages virtualization to abstract and automate the management of various data center components, including networking, storage, and security. SDI allows organizations to provision, configure, and manage infrastructure resources through software interfaces, reducing manual intervention and improving agility.

One aspect of SDI is software-defined networking (SDN), which separates the control plane from the data plane in network infrastructure. SDN allows for programmable and centralized control of network traffic, enabling dynamic network configuration and easier network management. This flexibility and automation simplify network provisioning, improve scalability, and facilitate network virtualization.

Another component of SDI is software-defined storage (SDS), which abstracts the physical storage infrastructure and provides a unified software layer for managing storage resources. SDS enables organizations to pool and allocate storage capacity based on application requirements, simplifying storage management and optimizing resource utilization. Additionally, SDS solutions often offer advanced features such as data deduplication, compression, and replication for improved efficiency and data protection.
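
Data deduplication, one of the SDS features mentioned above, can be illustrated with a toy content-addressed store in which identical blocks are kept only once. The class and method names are hypothetical, and real SDS products work on fixed or variable-size chunks with far more bookkeeping.

```python
import hashlib


class DedupStore:
    """Toy content-addressed store: identical blocks are kept only once."""

    def __init__(self):
        self.blocks = {}        # SHA-256 digest -> block bytes
        self.bytes_written = 0  # logical bytes clients asked to store

    def put(self, block: bytes) -> str:
        """Store a block and return the digest that references it."""
        digest = hashlib.sha256(block).hexdigest()
        self.bytes_written += len(block)
        # Only the first copy of a given block consumes space.
        self.blocks.setdefault(digest, block)
        return digest

    def get(self, digest: str) -> bytes:
        return self.blocks[digest]

    def bytes_stored(self) -> int:
        """Physical bytes actually held after deduplication."""
        return sum(len(b) for b in self.blocks.values())


store = DedupStore()
refs = [store.put(b) for b in (b"log-header", b"payload-A", b"log-header")]
print(store.bytes_written, store.bytes_stored())
```

The gap between logical bytes written and physical bytes stored is the saving that deduplication provides.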

Big Data Analytics: Unleashing the Power of Data Insights

Big data analytics allows businesses to extract valuable insights from massive datasets, enabling better decision-making and strategic planning. With the increasing volume, variety, and velocity of data, big data analytics is becoming essential in data server technology. In this section, we will discuss the trends and advancements in big data analytics within the context of data server technology.

Real-time Analytics: Immediate Insights for Actionable Intelligence

The trend in big data analytics is towards real-time analytics, where insights are generated and acted upon in near real-time. Traditional batch processing of data can lead to delays in extracting insights, which may limit the ability to respond quickly to changing conditions or emerging opportunities. Real-time analytics enables organizations to make informed decisions and take immediate actions based on up-to-date data.

Real-time analytics involves processing data streams as they arrive, often using technologies such as stream processing frameworks or in-memory databases. These technologies allow for continuous analysis of data, enabling organizations to detect patterns, anomalies, or trends as they occur. Real-time analytics finds applications in various domains, such as financial services, IoT, and online retail, where timely insights are crucial for competitive advantage.
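
The idea of analyzing data as it arrives, rather than in batches, can be sketched with a sliding window. This is a plain Python illustration of the concept, not the API of any particular stream processing framework; the window size is an assumed parameter.

```python
from collections import deque


def rolling_average(stream, window=3):
    """Yield the average of the last `window` values as each value arrives."""
    recent = deque(maxlen=window)  # old values fall out automatically
    for value in stream:
        recent.append(value)
        yield sum(recent) / len(recent)


# Each incoming value immediately produces an updated result.
print(list(rolling_average([10, 20, 30, 40], window=3)))
```

Because the function is a generator, it works equally well on an unbounded source such as a message queue consumer, producing an updated figure for every event without waiting for a batch to complete.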

Advanced Analytics Techniques: From Descriptive to Predictive and Prescriptive

In addition to real-time analytics, advanced analytics techniques are shaping the future of big data analytics. While descriptive analytics focuses on summarizing historical data, predictive and prescriptive analytics take analytics to the next level by providing insights into future outcomes and prescribing actions to optimize results.

Predictive analytics utilizes statistical models and machine learning algorithms to forecast future trends or events based on historical data. By identifying patterns and correlations, predictive analytics can help businesses make informed predictions about customer behavior, market trends, or equipment failure, among other things. These predictions enable organizations to proactively address potential issues or opportunities.
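
As a minimal example of forecasting from historical data, the sketch below fits a straight line with ordinary least squares and extrapolates one step ahead. The monthly storage figures are invented for illustration, and real predictive models are usually far richer than a linear trend.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical history: storage used (TB) over five months.
months = [1, 2, 3, 4, 5]
storage_tb = [10, 12, 14, 16, 18]

slope, intercept = fit_line(months, storage_tb)
forecast = slope * 6 + intercept  # projected usage for month 6
print(forecast)
```

A forecast like this gives capacity planners a starting estimate; whether a linear trend is appropriate depends on how the underlying demand actually behaves.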

Prescriptive analytics, on the other hand, goes beyond predictions and provides recommendations for decision-making. By considering various constraints and objectives, prescriptive analytics algorithms can suggest optimal actions or strategies to achieve desired outcomes. This can range from optimizing supply chain operations to personalized recommendations for customers based on their preferences and behaviors.

Big Data Processing Frameworks: Scalability and Distributed Computing

Processing and analyzing large volumes of data require scalable and distributed computing frameworks. Big data processing frameworks, such as Apache Hadoop and Apache Spark, provide the infrastructure and tools to handle massive datasets across clusters of servers. These frameworks enable parallel processing, fault tolerance, and distributed storage, making it possible to process data at scale.
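
The parallel processing model behind these frameworks can be illustrated with a map-and-reduce word count in plain Python. This sketch does not use the Hadoop or Spark APIs; the partitions are processed sequentially here, whereas a real cluster would run the map step on many servers at once. The log lines are made up.

```python
from collections import Counter
from functools import reduce


def map_partition(lines):
    """Map step: count words within one partition of the data."""
    return Counter(word.lower() for line in lines for word in line.split())


def reduce_counts(a, b):
    """Reduce step: merge two partial counts into one."""
    return a + b


# Two partitions standing in for data spread across two servers.
partitions = [
    ["error disk full", "warning disk slow"],
    ["error network down", "error disk full"],
]

partial_counts = [map_partition(p) for p in partitions]
total = reduce(reduce_counts, partial_counts, Counter())
print(total.most_common(2))
```

Because each partition is counted independently and the partial results merge cleanly, the same job can be spread over more servers as the dataset grows.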

One trend in big data processing frameworks is the adoption of cloud-based solutions. Cloud platforms, such as Amazon Web Services (AWS) and Google Cloud Platform (GCP), offer managed big data services that eliminate the need for organizations to manage their own infrastructure. These services provide scalability, cost-effectiveness, and ease of use, allowing businesses to focus on data analysis rather than infrastructure management.

Furthermore, the integration of big data processing frameworks with AI and ML technologies is an emerging trend. This integration allows for advanced analytics, such as natural language processing, image recognition, or anomaly detection, on large-scale datasets. By combining big data processing with AI and ML, organizations can uncover valuable insights and drive innovation in various domains, including healthcare, finance, and marketing.

Internet of Things (IoT): Connecting and Managing a World of Devices

The proliferation of IoT devices has created challenges in managing and processing the vast amount of data they generate. Data servers play a crucial role in handling IoT data, ensuring its storage, processing, and analysis. In this section, we will explore the trends in IoT data server technology, including edge computing for IoT, data integration, and real-time analytics.

Edge Computing for IoT: Bringing Intelligence Closer to Devices

One trend in IoT data server technology is the adoption of edge computing to handle the massive amounts of data generated by IoT devices. Edge computing brings data processing closer to the devices themselves, reducing latency, bandwidth usage, and dependence on centralized data centers or the cloud. By analyzing data at the edge, organizations can extract real-time insights, make immediate decisions, and take actions without relying on a remote server or network connection.

Edge computing for IoT involves deploying edge servers or gateways that process and filter data locally before transmitting it to the cloud or data center. These edge devices can perform data preprocessing and aggregation, or even run AI and ML models to extract meaningful insights. This trend enables businesses to leverage the full potential of IoT data, especially in scenarios where real-time or near-real-time responses are critical.

Data Integration: Combining IoT Data with Enterprise Systems

Integrating IoT data with existing enterprise systems is another trend in IoT data server technology. IoT devices generate vast amounts of data that need to be integrated with other data sources, such as customer data, supply chain data, or financial data, to gain a comprehensive view of operations and enable data-driven decision-making. Data servers play a crucial role in managing and integrating this diverse and disparate data.

One approach to data integration is the use of data integration platforms or middleware that enable seamless connectivity and interoperability between IoT devices and enterprise systems. These platforms provide data transformation, cleansing, and mapping capabilities, ensuring data consistency and compatibility across different systems. The trend is towards more automated and streamlined data integration processes, reducing manual effort and enabling real-time data synchronization.
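
The transformation and mapping step can be sketched as follows: device payloads are converted to a common schema and enriched with data from an enterprise asset register. The field names (`devId`, `tempF`, `site`) and the sample records are hypothetical.

```python
def normalize(reading):
    """Map a hypothetical device payload to a common schema (Celsius, snake_case)."""
    return {
        "device_id": reading["devId"],
        "temperature_c": round((reading["tempF"] - 32) * 5 / 9, 1),
    }


def enrich(readings, assets):
    """Join normalized readings with enterprise asset data keyed by device ID."""
    for reading in readings:
        record = normalize(reading)
        record["site"] = assets.get(record["device_id"], {}).get("site", "unknown")
        yield record


# Assumed inputs: raw IoT payloads and an asset register from an enterprise system.
iot_readings = [{"devId": "pump-7", "tempF": 212.0}, {"devId": "fan-2", "tempF": 32.0}]
asset_register = {"pump-7": {"site": "Plant A"}}

integrated = list(enrich(iot_readings, asset_register))
print(integrated)
```

Devices missing from the asset register are still passed through with a site of "unknown", which keeps the pipeline running while making the data gap visible.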

Real-time Analytics for IoT: Extracting Insights on the Fly

Real-time analytics is essential for extracting insights from IoT data streams and enabling immediate actions or responses. With the high velocity and volume of IoT data, traditional batch processing approaches may not be suitable. Real-time analytics allows organizations to analyze data as it arrives, detecting anomalies, identifying patterns, or triggering alerts in real time.

Real-time analytics for IoT involves the use of stream processing frameworks, complex event processing (CEP) engines, or in-memory databases that can handle high-speed data streams. These technologies enable organizations to analyze data on the fly, providing timely insights for proactive decision-making. Real-time analytics finds applications in various IoT use cases, such as predictive maintenance, remote monitoring, or smart city management.

Hybrid Cloud Solutions: Bridging the Gap Between Public and Private Clouds

Hybrid cloud solutions offer a combination of public and private clouds, providing businesses with increased flexibility and security. By leveraging both public and private cloud environments, organizations can optimize their infrastructure, achieve scalability, and meet specific compliance or security requirements. In this section, we will discuss the trends and benefits of hybrid cloud solutions in the context of data server technology.

Flexibility and Scalability: Tailoring the Cloud Infrastructure

One of the key benefits of hybrid cloud solutions is the flexibility they offer to organizations. With a hybrid cloud approach, businesses can choose where to deploy their applications and data based on specific requirements. This flexibility allows organizations to leverage the scalability and agility of the public cloud for certain workloads or applications while keeping sensitive or critical data on private cloud infrastructure.

The trend in hybrid cloud solutions is towards more seamless integration and management of public and private cloud resources. Organizations are adopting cloud management platforms or tools that enable unified management and orchestration across different cloud environments. This trend simplifies the management of hybrid cloud infrastructure, enhances visibility and control, and ensures optimal resource utilization.

Security and Compliance: Meeting Specific Requirements

Hybrid cloud solutions address security concerns and compliance requirements that organizations may have. By keeping sensitive or regulated data on a private cloud, organizations can maintain tighter control over their data and ensure compliance with industry-specific regulations. At the same time, organizations can leverage the security measures provided by reputable public cloud providers for non-sensitive workloads or applications.
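
A placement rule of this kind can be written down as a small policy function. The sketch below is deliberately simple; the workload attributes `regulated` and `sensitivity` are hypothetical labels that an organization would define in its own data classification policy.

```python
def choose_environment(workload):
    """Return 'private' or 'public' for a workload described as a dict."""
    # Regulated or highly sensitive data stays on private infrastructure.
    if workload.get("regulated", False) or workload.get("sensitivity") == "high":
        return "private"
    # Everything else can use the public cloud.
    return "public"


workloads = [
    {"name": "patient-records", "regulated": True, "sensitivity": "high"},
    {"name": "marketing-site", "regulated": False, "sensitivity": "low"},
]

placement = {w["name"]: choose_environment(w) for w in workloads}
print(placement)
```

Encoding the rule in code, rather than deciding case by case, makes placement decisions repeatable and easy to audit.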

The trend in hybrid cloud security is the integration of security measures, such as encryption, access controls, and threat detection, across both public and private cloud environments. This integration ensures consistent security policies and enforcement, regardless of where data resides. Additionally, organizations are adopting cloud-native security solutions that provide advanced threat intelligence and protection mechanisms specifically designed for hybrid cloud environments.

Quantum Computing: Unlocking New Possibilities in Data Processing

Quantum computing holds the promise of revolutionizing data processing by leveraging quantum bits (qubits) instead of traditional binary bits. While still in its early stages, quantum computing has the potential to solve complex problems at an unprecedented speed and scale. In this section, we will explore the trends and potential applications of quantum computing in data server technology.

Quantum Supremacy: Pushing the Limits of Computation

One of the significant trends in quantum computing is the pursuit of quantum supremacy. Quantum supremacy refers to the point where a quantum computer can solve a problem that is beyond the practical reach of classical computers. It signifies a breakthrough in computational power and capabilities, opening doors to solving complex problems in various domains, including cryptography, optimization, and materials science.

Researchers and organizations are working towards building quantum computers with a sufficient number of qubits and low error rates to achieve quantum supremacy. This trend involves advancements in qubit technologies, error correction techniques, and quantum algorithms. Google reported such a result in 2019 on a narrowly defined sampling task, a claim that has since been contested, and no quantum computer has yet outperformed classical machines on a practical workload. Even so, progress in this field is accelerating, and the potential for transformative applications is vast.

Potential Applications of Quantum Computing in Data Servers

Quantum computing has the potential to impact data server technology in several ways. One potential application is in the field of cryptography. A sufficiently large, error-corrected quantum computer could break widely used public-key encryption algorithms, such as RSA, that rely on the difficulty of factoring large numbers. In response, quantum-resistant (post-quantum) encryption algorithms are being developed and standardized so that data remains secure even in the presence of quantum computers.
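
To see why factoring matters, consider a toy RSA example with deliberately tiny primes. The sketch below factors the public modulus by classical trial division, which is only feasible because the numbers are so small; it does not implement a quantum algorithm. The point is that anyone who can factor the modulus, by whatever means, can rebuild the private key. The specific primes and message are illustrative values.

```python
def trial_factor(n):
    """Find two factors of n by trial division (only practical for tiny n)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")


# Key generation with toy primes (real keys use primes hundreds of digits long).
p, q = 61, 53
n = p * q
e = 17
d = pow(e, -1, (p - 1) * (q - 1))  # private exponent (needs Python 3.8+)

message = 65
ciphertext = pow(message, e, n)

# An attacker who knows only the public values (n, e) and the ciphertext:
fp, fq = trial_factor(n)
recovered_d = pow(e, -1, (fp - 1) * (fq - 1))
recovered_message = pow(ciphertext, recovered_d, n)
print(recovered_message == message)
```

With realistic key sizes, the factoring step is infeasible for classical computers, and that step is exactly what a large-scale quantum computer would be expected to speed up.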

Another potential application is in optimization problems. Many real-world problems, such as resource allocation, scheduling, or route optimization, are computationally expensive and time-consuming for classical computers. Quantum computing can provide more efficient solutions to these optimization problems, leading to improved resource utilization, cost savings, and better decision-making.

Furthermore, quantum computing can have implications for data analysis and machine learning. Quantum machine learning algorithms can leverage the computational power of quantum computers to process and analyze large datasets more efficiently. This can lead to advancements in data analytics, pattern recognition, and predictive modeling, enabling organizations to extract valuable insights from complex data.

In conclusion, the world of data server technology is constantly evolving, driven by the need for more efficient, scalable, secure, and sustainable solutions. From cloud-based servers to edge computing, AI and ML to quantum computing, staying informed about these trends is crucial for businesses and individuals alike. By understanding and embracing these advancements, organizations can harness the power of data to drive innovation, make informed decisions, and stay ahead in an increasingly digital and data-driven world.

Disclaimer: The information provided in this article is based on industry trends and research. It is important to conduct further research and consult with experts before making any decisions or investments based on the content of this article.