Challenges for Data Servers

In today’s digital age, data servers play a crucial role in storing, processing, and managing vast amounts of information. However, these powerful machines face their fair share of challenges that can hinder their performance and efficiency. In this blog article, we will delve into the various obstacles that data servers encounter and explore the strategies employed to overcome them.

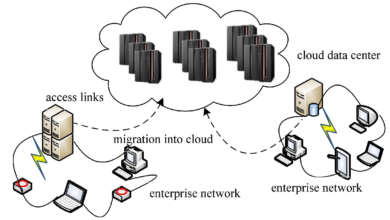

The first challenge that data servers face is scalability. As the amount of data generated continues to grow exponentially, servers must be able to handle increasing workloads without compromising speed or stability. Scalability is crucial to ensure that data servers can expand their resources as needed, without experiencing performance bottlenecks or system failures. One solution to address this challenge is the use of cloud computing. Cloud-based data servers provide the flexibility to scale resources up or down based on demand, enabling organizations to handle fluctuating workloads efficiently. Additionally, virtualization technology allows for the efficient utilization of server resources, enabling multiple virtual servers to run on a single physical server, further enhancing scalability.

Scalable Infrastructure: Ensuring Seamless Growth

Building a scalable infrastructure is essential for overcoming the challenges associated with data server scalability. By designing a system that can easily accommodate additional servers, storage, and processing power, organizations can ensure that their data servers can adapt to the increasing demands of data processing. One approach is to employ a modular design, where new server units can be added as needed, allowing for seamless expansion. Additionally, implementing load balancing mechanisms can distribute workloads evenly across multiple servers, preventing any single server from becoming overwhelmed. This approach optimizes resource utilization and ensures that no individual server becomes a performance bottleneck.

Cloud Computing: Harnessing the Power of Scalability

Cloud computing has revolutionized the scalability of data servers. By leveraging cloud-based infrastructure, organizations can offload the burden of scaling hardware and resources to service providers. Cloud platforms such as Amazon Web Services (AWS) and Microsoft Azure offer a wide range of scalable services, including virtual servers, storage, and databases. These services can be easily provisioned and scaled up or down based on demand, allowing organizations to match their resources to the workload effectively. Additionally, cloud services offer the advantage of pay-as-you-go pricing models, enabling businesses to optimize costs by only paying for the resources they require.

Virtualization: Efficient Resource Utilization

Virtualization technology plays a vital role in achieving scalability for data servers. By abstracting physical server resources into virtual machines (VMs), organizations can maximize the utilization of hardware and effectively scale their infrastructure. Virtualization allows multiple VMs to run on a single physical server, enabling efficient resource allocation and cost savings. Additionally, VMs can be easily migrated between physical servers, allowing for seamless scalability without disrupting ongoing operations. Virtualization also provides the flexibility to allocate resources dynamically, allowing organizations to respond to changing demands in real-time and ensure optimal performance.

Another significant challenge faced by data servers is data security. With cyber threats becoming increasingly sophisticated, protecting sensitive information stored on data servers has become paramount. Data breaches can have severe consequences, including financial losses, reputational damage, and legal implications. Therefore, data servers must employ robust security measures to safeguard data integrity and confidentiality.

Data Encryption: Protecting Sensitive Information

One of the most crucial aspects of data security is encryption. By encrypting data at rest and in transit, organizations can ensure that even if unauthorized individuals gain access to the data, it remains unreadable and unusable. Encryption algorithms such as Advanced Encryption Standard (AES) provide strong protection against data breaches. Implementing encryption mechanisms at the server level adds an additional layer of security, making it harder for attackers to compromise sensitive information. Additionally, organizations must ensure that encryption keys are properly managed and protected to prevent unauthorized access.

Intrusion Detection Systems: Detecting and Preventing Attacks

Intrusion Detection Systems (IDS) play a critical role in identifying potential attacks on data servers. An IDS monitors network traffic, analyzing patterns and behaviors to detect suspicious activity. By combining signature-based and anomaly-based detection techniques, an IDS can identify known attack patterns as well as previously unseen threats. Once an intrusion is detected, the IDS generates alerts for further investigation; when paired with intrusion prevention capabilities, it can also trigger preventive measures such as blocking suspicious IP addresses. Continuous monitoring and timely response are essential to ensuring the security of data servers.

Access Control Measures: Limiting Unauthorized Access

Controlling access to data servers is crucial in preventing unauthorized individuals from compromising sensitive information. Implementing strong authentication mechanisms such as multi-factor authentication (MFA) ensures that only authorized personnel can access the servers. Additionally, organizations should implement strict access control policies, granting privileges on a need-to-know basis. Role-based access control (RBAC) can be employed to assign specific roles and permissions to users, limiting their access to only what is necessary for their job responsibilities. Regular audits of user access and permissions help identify any anomalies or unauthorized access attempts.
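To make the RBAC idea concrete, here is a minimal sketch of a permission check. The role names, user names, and actions are hypothetical and chosen only for illustration; a real deployment would typically load them from a directory service or policy store.

```python
# Hypothetical roles and the actions each role may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "restart"},
    "admin": {"read", "restart", "configure"},
}

# Hypothetical assignment of users to roles.
USER_ROLES = {
    "alice": {"admin"},
    "bob": {"viewer"},
}


def is_allowed(user, action):
    """Return True if any of the user's roles grants the action."""
    roles = USER_ROLES.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown users and unknown roles fall through to an empty permission set, so the check denies by default.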

Physical Security: Protecting the Server Environment

While much emphasis is placed on digital security, physical security is equally important for data servers. Physical access to servers must be restricted to authorized personnel only, and security measures such as surveillance cameras, access control systems, and alarms should be in place to protect server facilities. Additionally, secure data centers should have redundant power supplies, environmental controls, and fire suppression systems to prevent any disruptions or damage to the servers. Physical security measures are essential to ensure the integrity and availability of data servers.

Power and Cooling Management: Ensuring Optimal Performance

Data servers generate a significant amount of heat, and inadequate cooling can lead to system failures and reduced performance. Proper power and cooling management are crucial for maintaining optimal server operation and preventing overheating. Some strategies for effective power management include employing energy-efficient hardware, implementing power monitoring and optimization tools, and utilizing intelligent power distribution units (PDUs) to regulate power consumption. For cooling, organizations can use technologies such as liquid cooling or hot aisle/cold aisle containment to efficiently dissipate heat. Implementing temperature and humidity monitoring systems can also help ensure that the server environment remains within the recommended operating conditions.

Energy-Efficient Hardware: Reducing Power Consumption

Energy efficiency is a key consideration for data servers, as they consume substantial amounts of power. Upgrading to energy-efficient hardware components, such as processors, memory modules, and power supplies, can significantly reduce power consumption. The use of solid-state drives (SSDs) instead of traditional hard disk drives (HDDs) can also contribute to energy savings. Additionally, organizations can employ power management techniques such as dynamic frequency scaling, which adjusts the CPU clock speed based on workload, lowering power draw during light load with little impact on performance. By adopting energy-efficient hardware and implementing power management strategies, organizations can minimize their environmental impact and reduce operational costs.

Smart Power Distribution Units: Optimizing Power Consumption

Smart power distribution units (PDUs) offer advanced power monitoring and control capabilities, enabling organizations to optimize power consumption in data servers. These intelligent PDUs allow for remote monitoring of power usage at the individual server or rack level. By identifying power-hungry servers or equipment, organizations can take corrective actions, such as load balancing or power capping, to ensure efficient resource allocation and minimize wastage. Additionally, smart PDUs can provide real-time alerts and notifications, allowing administrators to respond promptly to any power-related issues or abnormalities, further enhancing power management efficiency.

Liquid Cooling: Efficient Heat Dissipation

Traditional air cooling methods may not be sufficient to handle the heat generated by data servers, especially in densely packed server rooms or data centers. Liquid cooling offers a more efficient alternative, dissipating heat more effectively. Liquid cooling systems utilize coolants or heat transfer fluids to absorb the heat generated by the servers and carry it away from the critical components. This significantly reduces the reliance on air conditioning, resulting in lower energy consumption and better overall cooling efficiency. Liquid cooling solutions can be implemented at various levels, including direct-to-chip cooling, immersion cooling, or liquid-cooled racks, depending on the specific requirements and infrastructure of the data server environment.

Storage and Retrieval Speed: Enhancing User Experiences

Efficient storage and retrieval of data are crucial for providing seamless user experiences. Slow data access can lead to frustrated users and hamper productivity. It is essential to address the challenges related to data access latency and ensure that data servers can quickly retrieve and deliver information to end-users.

Solid-State Drives: Speeding Up Data Access

Traditional hard disk drives (HDDs) have mechanical components that limit their read and write speeds. Solid-state drives (SSDs), on the other hand, use flash memory technology, providing significantly faster data access times. By replacing HDDs with SSDs, organizations can dramatically improve the performance of data servers, reducing data retrieval latency and increasing overall system responsiveness. SSDs also offer better resistance to shock and vibration, making them more reliable in environments where physical shocks may occur. However, it is important to consider the cost and storage capacity trade-offs when deciding on the appropriate mix of SSDs and HDDs for the specific data server requirements.

Caching Mechanisms: Accelerating Data Retrieval

Caching mechanisms play a vital role in accelerating data retrieval from data servers. Caching involves storing frequently accessed data closer to the users or applications, reducing the need to retrieve it from the underlying storage systems. By utilizing caching techniques, organizations can significantly improve data access speeds and minimize latency. Content Delivery Networks (CDNs) are one example: they distribute cached data across multiple, geographically dispersed servers. This enables faster access to data by routing requests to the nearest server location, reducing latency and improving overall performance. Additionally, caching can be implemented at the application level, where frequently accessed data is stored in memory for quicker retrieval. This approach, known as in-memory caching, can significantly boost data server performance and enhance user experiences.
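As an illustration of in-memory caching, the sketch below keeps a bounded number of entries and evicts the least recently used one when full. The `LRUCache` class and the `loader` callback are assumptions made for this example and do not refer to any particular product; LRU is only one of several possible eviction policies.

```python
from collections import OrderedDict


class LRUCache:
    """Bounded in-memory cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key, loader):
        """Return the cached value, calling loader(key) only on a miss."""
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            return self._items[key]
        value = loader(key)  # slow path, e.g. a disk or database read
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the oldest entry
        return value
```

A caller passes the slow lookup as `loader`, so repeated requests for hot keys never reach the underlying storage.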

Content Delivery Networks: Optimizing Data Delivery

Content Delivery Networks (CDNs) are widely used to improve data server performance and optimize data delivery to end-users. CDNs utilize a distributed network of servers strategically located in various geographic regions. When a user requests data, the CDN intelligently routes the request to the server closest to the user, reducing the distance the data needs to travel and minimizing latency. CDNs also cache static content like images, videos, and JavaScript files, making them readily available to users without the need to retrieve them from the origin server. By leveraging CDNs, organizations can ensure faster data delivery, improved scalability, and enhanced user experiences.

Data Backup and Disaster Recovery: Ensuring Data Resilience

Data loss can have devastating consequences for businesses, ranging from financial losses to reputational damage. Therefore, data servers must have robust backup and disaster recovery strategies in place to ensure data resilience and minimize downtime in the event of a disaster or system failure.

Redundant Storage Systems: Protecting Against Data Loss

Redundant storage systems play a critical role in safeguarding data against failures. By implementing redundant storage architectures such as RAID (Redundant Array of Independent Disks), organizations can mirror data or protect it with parity across multiple drives, providing protection against drive failures. Note that not every RAID level is redundant: RAID 0, for example, stripes data for performance and offers no fault tolerance. RAID configurations can be chosen based on the specific requirements for fault tolerance, performance, and capacity. Additionally, organizations can employ technologies like erasure coding, where data is divided into fragments, extended with redundant fragments, and distributed across multiple storage nodes. This approach not only provides redundancy but also enables data reconstruction even if some storage nodes become unavailable.
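The reconstruction idea can be shown with the simplest possible scheme, a single XOR parity block, which is conceptually what parity-based RAID levels rely on. This is a simplified sketch: the block contents are made up, and production erasure codes (for example Reed-Solomon) tolerate more than one lost fragment.

```python
def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must have the same length")
    result = bytearray(length)
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


# Three data blocks plus one parity block, as if stored on four drives.
data = [b"ABCD", b"EFGH", b"IJKL"]
parity = xor_blocks(data)

# Simulate losing the second drive: XOR the survivors with the parity block.
recovered = xor_blocks([data[0], data[2], parity])
```

Because XOR is its own inverse, combining the surviving blocks with the parity block yields exactly the missing block.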

Off-Site Backups: Protecting Against Disasters

While redundant storage systems protect against drive failures, they may not be sufficient in the case of catastrophic events such as fires, floods, or earthquakes. Off-site backups are crucial for ensuring data recovery in such scenarios. Organizations can periodically replicate and store data backups in secure off-site locations, either physically or through remote data replication. Cloud-based backup services also provide an additional layer of protection by storing backups in geographically dispersed data centers. By maintaining off-site backups, organizations can recover data quickly and resume operations in the event of a disaster.

Automated Recovery Processes: Minimizing Downtime

In the event of a system failure or disaster, minimizing downtime is crucial for business continuity. Automated recovery processes can help organizations quickly restore data server functionality and minimize the impact of disruptions. Automated backup systems can schedule regular backups, ensuring that data is continuously backed up without manual intervention. Additionally, organizations can implement disaster recovery plans that outline step-by-step procedures for recovering data servers and restoring services. These plans should include predefined roles and responsibilities, communication protocols, and testing mechanisms to ensure the effectiveness of the recovery processes.

Network Congestion and Bandwidth Limitations: Ensuring Smooth Data Transmission

Data servers rely on networks to transmit data to end-users, and network congestion or limited bandwidth can significantly impact performance. To ensure smooth data transmission and minimize latency, organizations must address the challenges posed by network congestion and bandwidth limitations.

Load Balancing: Distributing Workload Efficiently

Load balancing is a technique used to distribute network traffic evenly across multiple servers, ensuring optimal resource utilization and preventing any single server from becoming overwhelmed. Load balancers act as intermediaries between end-users and data servers, routing requests to the server with the most available capacity. This approach helps alleviate network congestion and ensures that data servers can handle incoming requests efficiently. Load balancing can be implemented at various levels, including application-level load balancing, network-level load balancing, and DNS-based load balancing.
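One common way to approximate "the server with the most available capacity" is the least-connections strategy, sketched below. The backend names are hypothetical, and a real load balancer would also handle health checks and concurrency.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    active: int = 0  # requests currently in flight


class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest active requests."""

    def __init__(self, names):
        self.backends = [Backend(name) for name in names]

    def acquire(self):
        # min() returns the first backend among ties.
        backend = min(self.backends, key=lambda b: b.active)
        backend.active += 1
        return backend

    def release(self, backend):
        # Call when the request finishes so the backend's load drops again.
        backend.active = max(0, backend.active - 1)


lb = LeastConnectionsBalancer(["srv-a", "srv-b", "srv-c"])
first, second, third = lb.acquire(), lb.acquire(), lb.acquire()
lb.release(second)     # srv-b finishes its request
fourth = lb.acquire()  # srv-b is now the least loaded backend
```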

Traffic Shaping: Prioritizing Data Traffic

Traffic shaping involves managing network traffic to prioritize critical data and ensure smooth transmission. By implementing traffic shaping mechanisms, organizations can allocate bandwidth based on priorities, guaranteeing that essential data flows smoothly while less critical traffic is given lower priority. This approach helps mitigate network congestion and ensures that data servers receive sufficient bandwidth to deliver data to end-users without delay. Traffic shaping techniques include Quality of Service (QoS) policies, which prioritize specific types of traffic, and traffic classification mechanisms that identify and prioritize traffic based on application or protocol.
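A token bucket is one widely used building block for rate control, and the sketch below shows its core logic. The class name and parameters are assumptions for illustration. Timestamps are passed in explicitly to keep the example deterministic. Note that this version simply rejects non-conforming requests, whereas a shaper would typically queue them until tokens become available.

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.last = 0.0         # timestamp of the previous call, in seconds

    def allow(self, now, cost=1.0):
        """Return True if a request of the given cost may pass at time `now`."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Giving high-priority traffic a bucket with a larger `rate` or `capacity` than low-priority traffic is one simple way to express a QoS policy with this primitive.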

Content Optimization: Minimizing Data Transfer

Content optimization techniques can significantly improve data server performance by reducing the amount of data that needs to be transmitted. Compression algorithms, such as gzip, can compress data before transmission, reducing file sizes and minimizing bandwidth requirements. Additionally, organizations can employ techniques like minification, where unnecessary characters and white spaces are removed from code files, reducing their size and improving load times. Caching mechanisms, as discussed earlier, also play a role in content optimization by storing frequently accessed content closer to end-users, reducing the need for data server retrieval.
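The effect of compression is easy to check with Python's standard `gzip` module. The payload below is a made-up, highly repetitive HTML fragment, so the ratio it produces is illustrative only; real content will compress less dramatically.

```python
import gzip

# Hypothetical payload: repetitive markup compresses very well.
payload = ('<li class="item">example row</li>\n' * 200).encode("utf-8")

compressed = gzip.compress(payload)
restored = gzip.decompress(compressed)

# Fraction of the original size that actually travels over the network.
ratio = len(compressed) / len(payload)
print(f"{len(payload)} bytes -> {len(compressed)} bytes ({ratio:.1%})")
```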

Virtualization and Resource Allocation: Optimizing Server Utilization

Virtualization allows for the efficient utilization of server resources, enabling multiple virtual servers to run on a single physical server. While virtualization provides numerous benefits, it also presents challenges such as resource contention and performance bottlenecks. Organizations must address these challenges to optimize server utilization and ensure efficient operation of data servers.

Resource Allocation: Balancing Workloads

Resource allocation is a critical aspect of virtualization that involves distributing server resources effectively among virtual machines. By monitoring resource usage and allocating resources based on demand, organizations can prevent resource contention and ensure that each virtual machine receives the necessary resources to operate optimally. Resource allocation techniques include dynamic resource allocation, where resources are adjusted in real-time based on workload, and resource reservation, where specific resources are allocated exclusively to specific virtual machines.

Hypervisor Management: Monitoring and Optimization

Efficient hypervisor management is essential for ensuring the smooth operation of virtualized data servers. Hypervisors are responsible for managing and controlling virtual machines, allocating resources, and facilitating communication between virtual machines and physical hardware. By monitoring hypervisor performance, organizations can identify potential bottlenecks or performance issues and take appropriate measures to optimize resource utilization. Hypervisor management tools provide insights into resource usage, virtual machine performance, and overall system health, enabling administrators to make informed decisions and ensure optimal performance.

Workload Balancing: Distributing Work Effectively

Workload balancing involves distributing workloads evenly across virtual machines to ensure optimal resource utilization and prevent performance degradation. By monitoring resource usage and workload patterns, organizations can identify imbalances and redistribute workloads to achieve better resource utilization. Load balancing mechanisms, as mentioned earlier, can also be employed to balance workloads across multiple physical servers, further enhancing resource optimization. Workload balancing helps prevent resource contention and ensures that each virtual machine operates within its allocated resources, maximizing performance and efficiency.

Data Compliance and Regulatory Requirements: Meeting Standards and Regulations

Data servers must adhere to various compliance and regulatory standards, depending on the industry they serve. Organizations must address the challenges associated with data compliance to ensure that data servers meet the necessary requirements and mitigate legal risks.

Data Encryption: Safeguarding Sensitive Information

Data encryption, as discussed earlier, plays a crucial role in data security. However, it also contributes to data compliance by ensuring that sensitive information is protected in line with regulatory requirements. Encrypting data at rest and in transit supports compliance with data protection regulations such as the General Data Protection Regulation (GDPR) or the Health Insurance Portability and Accountability Act (HIPAA), although encryption alone does not satisfy either one. Encryption provides an additional layer of security and helps organizations reduce the legal exposure associated with data breaches.

Audit Trails: Maintaining Data Accountability

Audit trails help organizations maintain data accountability by tracking and logging activities related to data servers. By recording data access, modifications, and system changes, organizations can demonstrate compliance with regulatory requirements and ensure transparency in data handling. Audit trails provide a historical record of data server activities, enabling organizations to identify any unauthorized access attempts or suspicious activities. Implementing robust logging mechanisms and regularly reviewing audit trails help maintain data integrity and facilitate regulatory compliance.

Data Governance Frameworks: Establishing Policies and Procedures

Data governance frameworks provide a structured approach to managing and protecting data. By establishing policies and procedures for data handling, organizations can ensure compliance with regulatory requirements and mitigate legal risks. Data governance frameworks define roles and responsibilities, data classification and handling guidelines, and data retention and deletion policies. These frameworks also encompass data privacy and consent policies, ensuring that data servers adhere to applicable privacy laws. Implementing a robust data governance framework helps organizations maintain regulatory compliance and instill trust among stakeholders.

Scalability and Elasticity: Adapting to Changing Demands

As businesses grow, data servers need to scale and adapt to changing demands quickly. Scalability and elasticity challenges must be addressed to ensure that data servers can handle increased workloads efficiently.

Auto-Scaling: Dynamic Resource Provisioning

Auto-scaling enables data servers to automatically adjust resources based on workload demands. By monitoring server performance metrics, organizations can trigger auto-scaling mechanisms to provision additional resources when demand increases and release resources when demand decreases. This dynamic resource provisioning ensures that data servers can handle fluctuating workloads without manual intervention. Auto-scaling can be implemented at various levels, including virtual machine scaling, container scaling, or even application-level scaling, depending on the specific infrastructure and requirements.
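A simple way to turn a monitored metric into a scaling decision is a proportional rule: scale the current instance count by the ratio of observed to target utilization, then clamp the result. The function below is a sketch under that assumption, with hypothetical parameter names and limits; the Kubernetes Horizontal Pod Autoscaler documents a similar formula.

```python
import math


def desired_replicas(current, observed_util, target_util,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: keep average utilization near the target."""
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util must be > 0")
    raw = math.ceil(current * observed_util / target_util)
    # Clamp so a metric spike cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four instances running at 90% utilization against a 60% target yield six instances, while the same four at 30% shrink to two.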

Distributed Computing: Harnessing the Power of Networks

Distributed computing enables organizations to leverage the power of interconnected networks to handle large-scale data processing tasks efficiently. By distributing workloads across multiple servers or nodes, organizations can achieve faster processing times and improved scalability. Distributed computing frameworks, such as Apache Hadoop or Apache Spark, provide the infrastructure and tools necessary to distribute and manage data processing tasks across a network of servers. This approach allows data servers to handle massive data sets and perform complex computations in parallel, providing enhanced scalability and performance.

Containerization: Lightweight and Scalable Deployment

Containerization technology, exemplified by Docker and by orchestration platforms like Kubernetes, offers a lightweight and scalable approach to deploying and managing applications. Containers encapsulate applications and their dependencies, allowing them to run consistently across different environments. By leveraging containerization, organizations can deploy and scale applications on data servers with less per-server configuration. Containers offer flexibility and portability, enabling organizations to scale their applications on demand and migrate them across different servers or cloud environments.

Data Integration and Interoperability: Facilitating Seamless Data Exchange

Data servers often need to integrate with various systems and applications, which can pose challenges in terms of compatibility and data interoperability. Organizations must address these challenges to ensure seamless data exchange and efficient data integration.

Data Mapping: Bridging Data Formats and Structures

Data mapping is a process that involves matching and transforming data from one format or structure to another. Organizations must employ data mapping techniques to bridge the gap between different systems’ data formats and ensure smooth data exchange. By defining mappings and transformations, organizations can facilitate interoperability and enable data servers to efficiently process and exchange data with different applications and systems.
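A mapping can be expressed as a table from source fields to target fields, each with a transformation. In the sketch below the field names, the DD/MM/YYYY source date format, and the `map_record` helper are all hypothetical, chosen only to illustrate the pattern.

```python
# Source field -> (target field, transformation). All names are hypothetical.
FIELD_MAP = {
    "cust_name": ("customer_name", str.strip),
    # Assumes the source system stores dates as DD/MM/YYYY.
    "signup": ("signup_date", lambda s: "-".join(reversed(s.split("/")))),
    "total": ("order_total", float),
}


def map_record(source):
    """Translate one source record into the target schema."""
    target = {}
    for src_field, (dst_field, transform) in FIELD_MAP.items():
        if src_field in source:
            target[dst_field] = transform(source[src_field])
    return target
```

Keeping the mapping in data rather than in scattered code makes it easier to review and extend when a new source system is added.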

API Integration: Enabling System Communication

Application Programming Interfaces (APIs) play a crucial role in facilitating system communication and data exchange. By implementing APIs, organizations can establish standardized interfaces that enable data servers to interact with external systems or applications. API integration allows for seamless data transfer and facilitates real-time communication between data servers and other systems. Organizations can leverage APIs to integrate data servers with various applications, such as CRM systems, ERP systems, or analytics platforms, enabling efficient data exchange and supporting data-driven decision-making.

Middleware Solutions: Simplifying Integration Complexity

Middleware solutions provide a layer of software that simplifies integration complexity between different systems and applications. These solutions act as intermediaries, facilitating communication and data exchange between data servers and external systems. By leveraging middleware, organizations can abstract the intricacies of integration and ensure that data servers can efficiently interact with diverse systems. Middleware solutions often offer features such as data transformation, message queuing, and protocol translation, enabling seamless data integration and interoperability.

Monitoring and Performance Management: Ensuring Optimal Server Performance

Continuous monitoring and performance management are crucial for maintaining optimal server performance and identifying potential issues. Organizations must address the challenges associated with server monitoring and employ effective strategies to ensure the smooth operation of data servers.

Performance Analytics: Gaining Insights into Server Performance

Performance analytics involves collecting and analyzing data related to server performance metrics. By monitoring key performance indicators (KPIs) such as CPU usage, memory utilization, and network throughput, organizations can gain insights into data server performance and identify potential bottlenecks or areas for improvement. Performance analytics tools provide visualizations and reports that enable administrators to make data-driven decisions and optimize server performance.

Log Analysis: Identifying Anomalies and Issues

Log analysis involves examining logs generated by data servers to identify anomalies, errors, or security incidents. By analyzing log data, organizations can gain visibility into server activities and detect any unusual patterns or events that may indicate performance issues or security breaches. Log analysis tools employ various techniques, such as log aggregation, correlation, and anomaly detection, to identify potential issues and proactively address them.

Anomaly Detection: Early Warning for Performance Issues

Anomaly detection involves identifying deviations from normal server behavior or performance metrics. By utilizing machine learning algorithms and statistical analysis, organizations can establish baseline patterns and detect anomalies that may indicate performance issues or potential failures. Anomaly detection systems can provide early warnings, allowing administrators to take proactive measures to mitigate the impact of performance issues and ensure optimal data server operation.
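The simplest statistical form of this idea is a z-score test against a baseline. The sketch below assumes hypothetical CPU utilization readings and a threshold of three standard deviations; production systems usually use more robust models that account for trends and seasonality.

```python
from statistics import mean, stdev


def find_anomalies(baseline, samples, threshold=3.0):
    """Return samples more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [s for s in samples if s != mu]
    return [s for s in samples if abs(s - mu) / sigma > threshold]


# Hypothetical CPU utilization readings (percent) under normal load.
baseline = [41, 39, 40, 42, 38, 40, 41, 39]
alerts = find_anomalies(baseline, [40, 43, 95])
```

Here only the 95% reading is flagged; 43% is unusual but stays within three standard deviations of the baseline mean of 40%.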

Cost Optimization and Resource Efficiency: Maximizing ROI

Data servers can be a significant expense for organizations. It is essential to address the challenges associated with cost optimization and resource efficiency to minimize operational costs while maximizing the return on investment (ROI).

Server Consolidation: Maximizing Resource Utilization

Server consolidation involves moving the workloads of multiple physical servers onto a single server or a smaller number of servers. By consolidating servers, organizations can maximize resource utilization and reduce hardware and operational costs. Virtualization technology plays a crucial role in server consolidation, enabling multiple virtual servers to run on a single physical server, reducing the need for additional hardware and associated expenses.

Workload Balancing: Optimizing Resource Allocation

Workload balancing, as discussed earlier, is not only essential for performance optimization but also for resource efficiency. By efficiently distributing workloads across data servers, organizations can ensure that each server operates within its capacity, minimizing wasted resources. Workload balancing ensures that resources are allocated based on demand and prevents overprovisioning or underutilization, optimizing resource efficiency and reducing unnecessary costs.

Energy-Efficient Hardware: Reducing Power Consumption

Energy efficiency is not only crucial for environmental sustainability but also for cost optimization. By upgrading to energy-efficient hardware components, organizations can reduce power consumption and lower operational costs. Energy-efficient processors, memory modules, and power supplies, along with technologies such as solid-state drives (SSDs) and efficient cooling systems, contribute to significant energy savings. By evaluating the energy efficiency of hardware components and selecting those that meet performance requirements while consuming less power, organizations can achieve cost savings in the long run.

Optimized Workloads: Rightsizing Resources

Rightsizing workloads involves matching the resources allocated to data servers with their actual requirements. By analyzing workload patterns and resource usage, organizations can identify underutilized or overprovisioned servers and adjust resource allocations accordingly. Rightsizing workloads helps ensure that resources are allocated optimally, minimizing unnecessary costs associated with overprovisioning while avoiding performance issues caused by underprovisioning.
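A first pass at rightsizing can be as simple as comparing average utilization with two thresholds. The 20% and 80% cut-offs and the server names below are assumptions for illustration; suitable thresholds depend on the workload and on how much headroom peaks require.

```python
def rightsize(avg_cpu_by_server, low=20.0, high=80.0):
    """Label each server by its average CPU utilization (percent)."""
    report = {}
    for name, avg in avg_cpu_by_server.items():
        if avg < low:
            report[name] = "overprovisioned"   # candidate for downsizing
        elif avg > high:
            report[name] = "underprovisioned"  # candidate for more resources
        else:
            report[name] = "right-sized"
    return report


# Hypothetical averages gathered from monitoring over a review period.
report = rightsize({"db-1": 92.0, "web-1": 12.5, "web-2": 55.0})
```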

In conclusion, data servers face numerous challenges in today’s data-driven world. From scalability and security to performance management and compliance, each hurdle requires careful consideration and implementation of appropriate strategies. By addressing these challenges head-on, organizations can ensure efficient data management, enhance user experiences, and stay ahead in the fast-paced digital landscape.