Demystifying Data Server Jargon

Are you tired of feeling lost in a sea of technical jargon when it comes to data servers? Don’t worry, you’re not alone. The world of data servers can be incredibly complex and confusing, with a seemingly endless array of acronyms and terminology to navigate. But fear not, because in this blog article, we will demystify the often bewildering world of data server jargon.

In this comprehensive guide, we will break down the most common terms and concepts related to data servers, making them easy to understand and digest. Whether you’re a tech-savvy professional or a newcomer to the world of data servers, this article is designed to provide you with the knowledge and understanding you need to confidently navigate this complex landscape.

What is a Data Server?

A data server is a powerful computer system that is specifically designed to manage, store, and retrieve data. It acts as a central hub for storing and processing large amounts of information, making it accessible to other devices or users on a network. Data servers play a crucial role in various industries and organizations, enabling efficient and secure data management.

At its core, a data server consists of both hardware and software components. The hardware includes components such as CPUs (Central Processing Units), RAM (Random Access Memory), storage devices, and network interfaces. These components work together to handle data processing, storage, and communication tasks. On the other hand, the software aspect of a data server includes the operating system, database management system, and other software applications that facilitate data management and processing.

The Importance of Data Servers

Data servers are essential for businesses and organizations that deal with large volumes of data. They provide a centralized platform for storing and managing critical information, ensuring easy access, efficient processing, and reliable backup. Data servers also enable data sharing and collaboration among users within a network, facilitating seamless communication and streamlined workflows.

Furthermore, data servers offer enhanced data security and protection. With robust security measures in place, such as encryption, firewalls, and access controls, data servers safeguard sensitive information from unauthorized access, theft, or data breaches. This makes them an integral part of ensuring data integrity and compliance with privacy regulations.

The Components of a Data Server

A data server comprises several key components that work together to perform various tasks. These components include:

1. CPUs (Central Processing Units):

The CPU is the brain of the data server. It executes instructions and performs calculations, enabling data processing and manipulation. The performance of the CPU greatly impacts the overall speed and efficiency of the server.

2. RAM (Random Access Memory):

RAM is a type of volatile memory that provides temporary storage for data that the CPU is actively working on. It allows for quick access to data, significantly improving processing speed. The amount of RAM in a server determines its multitasking capabilities and the amount of data it can handle simultaneously.

3. Storage Devices:

Data servers utilize various storage devices to store vast amounts of data. These may include hard disk drives (HDDs), solid-state drives (SSDs), or even advanced storage technologies like RAID (Redundant Array of Independent Disks) for improved data redundancy and performance.

4. Network Interfaces:

Network interfaces allow the data server to connect to a network, enabling data transfer and communication with other devices. These interfaces can be wired (e.g., Ethernet) or wireless (e.g., Wi-Fi), depending on the network infrastructure and requirements.

5. Operating System:

The operating system (OS) is the software that manages the server’s hardware resources and provides a platform for running applications. Common server operating systems include Windows Server, Linux distributions like Ubuntu or CentOS, and UNIX-based systems.

6. Database Management System (DBMS):

A DBMS is a software application that allows for efficient storage, organization, and retrieval of data from databases. It provides a structured approach to managing data and enables users or applications to interact with the stored information. Popular DBMS options include MySQL, Oracle, Microsoft SQL Server, and PostgreSQL.

7. Additional Software Applications:

Data servers often host various software applications that extend their functionality. These applications may include web servers (e.g., Apache or Nginx), email servers (e.g., Microsoft Exchange or Postfix), or specialized server software for specific purposes, such as content management systems (CMS) or customer relationship management (CRM) systems.

Understanding Server Hardware

In this section, we will delve into the hardware aspects of a data server. We will explore the different components that make up a server, such as CPUs, RAM, and storage devices. This section will provide a comprehensive understanding of server hardware and its impact on server performance.

The Role of CPUs in Data Servers

The CPU, or the central processing unit, is the primary component responsible for executing instructions and performing calculations in a data server. It acts as the brain of the server, handling all the computational tasks required for data processing and manipulation. CPUs vary in their speed, architecture, and the number of cores they possess, which directly impacts the server’s processing power and performance.

When it comes to data servers, CPUs with multiple cores are highly desirable. A core is like an independent processing unit within a CPU, capable of executing instructions simultaneously. Servers with multiple cores can handle multiple tasks concurrently, improving overall performance and multitasking capabilities. Furthermore, CPUs with higher clock speeds can process instructions faster, resulting in quicker data processing and response times.

The Impact of RAM on Server Performance

Random Access Memory (RAM) plays a crucial role in a data server’s performance. It provides temporary storage for data that the CPU is actively working on, allowing for quick access and manipulation. The amount of RAM in a server directly affects its multitasking capabilities and the amount of data it can handle simultaneously.

When a server has insufficient RAM to accommodate the data being processed, it may resort to using the hard disk drive (HDD) as virtual memory, known as paging or swapping. This process is significantly slower than accessing data from RAM, leading to reduced performance. To avoid such bottlenecks, servers should have an adequate amount of RAM to handle the demands of the applications and data being processed.

Server administrators must consider the server’s workload and the memory requirements of the applications running on it when determining the appropriate amount of RAM. Memory-intensive applications, such as databases or virtualization software, may require larger amounts of RAM to ensure smooth operation and prevent performance degradation.

Storage Devices: Hard Disk Drives (HDDs) vs. Solid-State Drives (SSDs)

Data servers employ various storage devices to store and retrieve vast amounts of data. Traditionally, hard disk drives (HDDs) have been the go-to storage solution for servers. However, solid-state drives (SSDs) have gained popularity in recent years due to their superior performance and reliability.

HDDs utilize spinning magnetic disks and mechanical read/write heads to store and retrieve data. They offer large storage capacities at relatively lower costs, making them suitable for applications that require extensive storage, such as archival or backup purposes. However, HDDs are limited by their mechanical nature, resulting in slower data access speeds and higher latency compared to SSDs.

In contrast, SSDs use flash memory technology, which allows for faster data access and transfer speeds. With no moving parts, SSDs offer improved reliability, lower power consumption, and reduced noise levels. These characteristics make SSDs ideal for applications that require high-performance storage, such as databases, web servers, or virtualization environments.

When choosing between HDDs and SSDs for a data server, administrators must consider the specific requirements of the applications and the budget constraints. A combination of both storage technologies, where HDDs are used for bulk storage and SSDs for frequently accessed data or critical applications, can provide an optimal balance between performance and cost.

Network Interfaces: Wired and Wireless Connectivity

Network interfaces play a vital role in data servers, allowing them to connect to a network and communicate with other devices. Depending on the server’s requirements and the network infrastructure, data servers can utilize wired or wireless network interfaces.

Wired Network Interfaces:

Ethernet is the most commonly used wired network interface in data servers. It provides reliable and high-speed data transfer capabilities over copper or fiber optic cables. Ethernet interfaces typically support various speeds, such as 1 Gigabit Ethernet (GbE), 10 GbE, or even higher speeds like 40 GbE or 100 GbE, enabling fast and efficient communication between servers, switches, and other network devices.

Wired network interfaces offer advantages such as greater stability, lower latency, and higher bandwidth compared to wireless connections. They are particularly suitable for data-intensive applications, large-scale server clusters, or environments where a stable and secure connection is essential.

Wireless Network Interfaces:

While wireless connectivity is not as prevalent in data servers as wired connections, there are situations where wireless network interfaces may be beneficial. Wireless interfaces, such as Wi-Fi, provide flexibility and convenience, allowing servers to be placed in locations without easy access to a wired network infrastructure.

Wireless interfaces are often used in environments where mobility is essential, suchas data centers with server racks that need to be moved or reconfigured frequently. Wireless network interfaces can also be useful for remote management and monitoring of data servers, allowing administrators to access and control servers from a distance without the need for physical connectivity.

However, it’s important to note that wireless connections may introduce potential security risks and performance limitations compared to wired connections. Wireless signals can be subject to interference, signal degradation, and unauthorized access. Therefore, when utilizing wireless network interfaces in data servers, proper security measures, such as strong encryption and access controls, should be implemented to ensure data integrity and prevent unauthorized access.

Exploring Server Operating Systems

In this section, we will discuss the different operating systems used in data servers. We will explore popular server operating systems such as Windows Server, Linux, and UNIX. This section will highlight the key features and functionalities of each operating system.

Windows Server

Windows Server is a widely used server operating system developed by Microsoft. It offers a range of features and functionalities that make it suitable for various server applications and environments. Windows Server provides a user-friendly interface and seamless integration with other Microsoft products, making it a popular choice for organizations that heavily rely on Microsoft technologies.

One of the key advantages of Windows Server is its extensive support for a wide range of applications and hardware devices. Microsoft maintains a vast ecosystem of software developers and hardware manufacturers, ensuring compatibility and ease of integration with third-party applications and devices. Windows Server also offers robust security features, such as Active Directory for centralized user management and access control, as well as built-in firewall and encryption capabilities.

Additionally, Windows Server provides powerful tools and services for web hosting, virtualization, and cloud computing. It offers Internet Information Services (IIS) for hosting websites and web applications, Hyper-V for virtualization, and integration with Microsoft Azure for scalable cloud solutions. These features make Windows Server a suitable choice for organizations that require a comprehensive and integrated server platform.

Linux

Linux is an open-source operating system that has gained significant popularity in the server market. It offers a high degree of flexibility, stability, and security, making it a preferred choice for many organizations, ranging from small businesses to large enterprises.

One of the main advantages of Linux is its open-source nature, which means that the source code is freely available and can be customized and modified by the community. This allows for continuous improvements, bug fixes, and the development of a vast array of software applications and tools. Linux also benefits from a large community of developers and users who contribute to its development and provide support through forums and documentation.

Linux distributions, such as Ubuntu, CentOS, and Debian, are tailored versions of the Linux operating system that come bundled with additional software packages and tools. These distributions often include server-specific features and optimizations, making them well-suited for data server environments. Linux is known for its stability and reliability, with many servers running for extended periods without the need for reboots or interruptions.

Linux also offers excellent support for server technologies, such as web servers (e.g., Apache or Nginx), database servers (e.g., MySQL or PostgreSQL), and containerization platforms (e.g., Docker or Kubernetes). Its compatibility with a wide range of hardware architectures and its ability to operate efficiently on both high-end servers and low-power devices make Linux a versatile and cost-effective choice for data servers.

UNIX

UNIX is one of the oldest and most influential operating systems in the history of computing. It served as the foundation for many modern operating systems, including Linux. UNIX is known for its stability, scalability, and security, making it a popular choice for mission-critical server environments.

UNIX-based operating systems, such as FreeBSD, OpenBSD, and Solaris, offer robust features for data servers. These operating systems provide advanced security mechanisms, such as file system permissions, access control lists, and auditing capabilities, to ensure data integrity and protection. They also offer excellent support for networking protocols and services, making them suitable for server applications that require high-performance networking.

UNIX-based operating systems are often used in enterprise-level server environments that demand high availability, reliability, and scalability. They provide extensive support for clustering and failover mechanisms, allowing multiple servers to work together and seamlessly handle increasing workloads or recover from system failures. UNIX’s reputation for stability and its long track record in enterprise computing make it a reliable choice for data servers in demanding environments.

The Role of Databases in Data Servers

In this section, we will explain the role of databases in data servers. We will explore different types of databases, such as relational databases and NoSQL databases, and discuss their advantages and use cases. This section will provide a deeper understanding of how data is structured and managed within a server.

Relational Databases

Relational databases are the most widely used type of database in data server environments. They organize and store data in tables with rows and columns, where each row represents a record, and each column represents a specific attribute or field of the data. Relational databases follow a structured approach, as they require predefined schemas that define the structure, relationships, and constraints of the data.

One of the key advantages of relational databases is their ability to establish relationships between tables, enabling efficient data retrieval and manipulation. These relationships are defined through keys, such as primary keys and foreign keys, ensuring data integrity and enforcing referential integrity constraints. Relational databases also provide powerful query languages, such as SQL (Structured Query Language), which enables users to retrieve, filter, and aggregate data using intuitive and standardized syntax.

Relational databases are well-suited for applications that require complex data relationships, transactional integrity, and ACID (Atomicity, Consistency, Isolation, Durability) compliance. They are commonly used in a wide range of applications, including e-commerce platforms, content management systems, financial systems, and customer relationship management (CRM) software.

NoSQL Databases

NoSQL (Not Only SQL) databases have gained popularity in recent years, particularly in scenarios where traditional relational databases may not be the most suitable solution. NoSQL databases provide a flexible and scalable approach to data storage and retrieval, focusing on high-performance and horizontal scalability.

NoSQL databases depart from the structured nature of relational databases and offer various data models. Some common types of NoSQL databases include:

1. Document Databases:

Document databases store data in flexible and self-describing documents, typically using formats like JSON or XML. Each document can have its own structure, allowing for dynamic schemas. Document databases excel in scenarios where data is unstructured or semi-structured, such as content management systems, social media platforms, or e-commerce product catalogs.

2. Key-Value Stores:

Key-value stores are simple databases that store data as key-value pairs. They offer fast data retrieval based on the key and are well-suited for high-speed caching, session management, and storing metadata. Key-value stores are commonly used in distributed systems and applications that require high throughput and low latency.

3. Column-Family Stores:

Column-family stores organize data in column families, which consist of rows with multiple columns. Each column can store multiple versions or timestamps of data, allowing for efficient storage and retrieval of large amounts of data. Column-family stores are often used in applications that require storing and analyzing vast amounts of data, such as big data analytics or time-series data.

4. Graph Databases:

Graph databases are designed to represent and store data in the form of nodes and edges, allowing for efficient management of complex relationships between data entities. They excel in scenarios where relationships between data elements are crucial, such as social networks, recommendation systems, or network analysis.

NoSQL databases provide scalability and performance advantages by distributing data across multiple servers, enabling horizontal scaling and fault tolerance. They are particularly suitable for applications that require handling large volumes of data, high concurrency, or rapid data growth. However, it’s important to note that NoSQL databases may sacrifice certain features of relational databases, such as ACID compliance or complex data relationships, in favor of scalability and performance.

Network Protocols and Data Transfer

In this section, we will demystify network protocols used for data transfer in servers. We will explain common protocols like TCP/IP, HTTP, and FTP, and discuss their roles in facilitating data communication between servers and clients. This section will shed light on the underlying mechanisms that enable seamless data transfer.

TCP/IP

TCP/IP (Transmission Control Protocol/Internet Protocol) is the foundation of modern networking and the protocol suite used for communication over the internet. It provides a reliable and connection-oriented communication mechanism between devices on a network.

TCP/IP operates at the transport layer of the network protocol stack, handling the segmentation, reassembly, and reliable delivery of data packets. It ensures that data sent from one device is correctly received by the destination device, even in the presence of network congestion, errors, or packet loss.

TCP/IP utilizes IP addresses to identify devices on a network and ports to identify specific services or applications running on those devices. When data is sent over TCP/IP, it is divided into smaller packets and encapsulated with source and destination IP addresses and port numbers. These packets are then transmitted over the network and reassembled at the destination device.

TCP, the transport protocol within TCP/IP, provides features such as flow control, congestion control, and reliabledelivery of data. It establishes a connection between the sending and receiving devices, ensuring that data is delivered in the correct order and without loss or corruption. TCP guarantees the reliability of data transmission by employing acknowledgments, retransmissions, and error-checking mechanisms.

IP, on the other hand, is responsible for addressing and routing packets across networks. It assigns unique IP addresses to devices and determines the best path for data packets to reach their destination. IP ensures that packets are properly routed and delivered to the correct device based on its IP address.

TCP/IP is the backbone of modern internet communication and is used by a wide range of applications and services. It enables seamless data transfer between servers and clients, allowing for reliable and efficient communication across networks.

HTTP

HTTP (Hypertext Transfer Protocol) is a protocol used for transmitting data over the internet. It is the foundation of the World Wide Web and is responsible for fetching and displaying web pages in web browsers. HTTP operates at the application layer of the network protocol stack.

HTTP follows a client-server model, where a client (typically a web browser) sends a request to a web server, and the server responds with the requested data. The client sends an HTTP request message, which includes the desired action (e.g., GET, POST, PUT) and the URL of the resource it wants to access. The server processes the request and sends back an HTTP response message, which contains the requested data or an error message if the request cannot be fulfilled.

HTTP uses a stateless, request-response mechanism, meaning that each request from the client is treated independently, without any knowledge of previous requests. To maintain state and enable more complex interactions, web applications often use cookies or session management techniques.

Over the years, HTTP has evolved to include additional features and security enhancements. For example, HTTP/1.1 introduced persistent connections, allowing multiple requests and responses to be sent over a single TCP connection, reducing the overhead of establishing a new connection for each request. HTTPS (HTTP Secure) employs encryption through SSL/TLS protocols to ensure secure communication between clients and servers, protecting sensitive data from eavesdropping or tampering.

FTP

FTP (File Transfer Protocol) is a protocol specifically designed for transferring files between computers on a network. It provides a standardized way to upload, download, and manage files on remote servers. FTP operates at the application layer of the network protocol stack.

FTP follows a client-server model similar to HTTP. A client initiates a connection with an FTP server and authenticates itself using a username and password. Once authenticated, the client can perform various file operations, such as uploading files to the server, downloading files from the server, renaming or deleting files, and creating or managing directories.

FTP uses separate control and data connections for communication. The control connection handles commands and responses between the client and server, while the data connection is established specifically for transferring file data. FTP supports both active and passive modes for data transfer, depending on the network configuration and security requirements.

While FTP is a reliable and widely used protocol for file transfer, it lacks built-in encryption, making it susceptible to eavesdropping or data interception. To address this security concern, FTP over SSL/TLS (FTPS) and SSH File Transfer Protocol (SFTP) have been developed, providing secure alternatives for file transfer over encrypted connections.

SMTP and POP/IMAP

SMTP (Simple Mail Transfer Protocol) and POP/IMAP (Post Office Protocol/Internet Message Access Protocol) are protocols used for email communication. Together, they enable the sending, receiving, and retrieval of email messages between mail servers and clients.

SMTP is responsible for sending email messages from the sender’s mail client to the recipient’s mail server. It defines the rules and procedures for transferring email across the internet. SMTP uses a store-and-forward mechanism, where email messages are temporarily stored on intermediate mail servers and forwarded to the recipient’s server when it becomes available.

POP and IMAP, on the other hand, are used by mail clients to retrieve email messages from a mail server. POP (POP3) is a simple protocol that allows clients to download email messages from the server to their local devices. POP3 typically removes the messages from the server after they are downloaded, making it suitable for single-device access.

IMAP (IMAP4), on the other hand, provides a more advanced and feature-rich email retrieval protocol. IMAP allows clients to access email messages stored on the server, manage folders and mailboxes, and synchronize changes across multiple devices. Unlike POP, IMAP keeps the messages on the server, allowing users to access and manage their email from different devices.

Ngadsen test2

SMTP, POP, and IMAP work together to enable seamless email communication and retrieval. SMTP ensures the reliable delivery of email messages between servers, while POP and IMAP provide the means for users to access and manage their email messages from different devices and locations.

Scalability and Load Balancing

This section will focus on the concepts of scalability and load balancing in data servers. We will explain how servers scale to handle increasing workloads and distribute traffic effectively. This section will highlight the importance of these concepts in ensuring optimal server performance.

Scalability in Data Servers

Scalability is the ability of a data server to handle increasing workloads and accommodate growing demands. As the amount of data or the number of users and requests increases, a scalable server can adapt and maintain its performance levels without experiencing bottlenecks or performance degradation.

Data servers can be scaled in two primary ways: vertical scaling and horizontal scaling.

Vertical Scaling:

Vertical scaling, also known as scaling up, involves increasing the resources of a single server to enhance its performance and capacity. This can be achieved by adding more powerful CPUs, increasing the amount of RAM, or upgrading storage devices. Vertical scaling is often limited by the maximum capabilities of the server’s hardware and may reach a point of diminishing returns.

Horizontal Scaling:

Horizontal scaling, also known as scaling out, involves adding more servers to distribute the workload and handle increasing demands. Multiple servers work together as a cluster or a farm, sharing the workload and ensuring redundancy. Horizontal scaling offers greater scalability potential as it allows for virtually unlimited expansion by adding more servers to the cluster as needed.

Horizontal scaling can be achieved by implementing load balancing mechanisms, which distribute incoming requests or network traffic across multiple servers. This ensures that no single server is overwhelmed and that the workload is evenly distributed, allowing for efficient resource utilization.

Load Balancing in Data Servers

Load balancing is a technique used to distribute incoming network traffic across multiple servers or resources to ensure optimal utilization, improved performance, and high availability. Load balancers act as intermediaries between clients and servers, receiving incoming requests and redirecting them to the appropriate server based on predefined algorithms or criteria.

Load balancers can be implemented at different layers of the network stack, such as the application layer, transport layer, or network layer. Application-layer load balancers, often referred to as reverse proxies, operate at the highest layer and can make intelligent routing decisions based on application-specific requirements.

Load balancers employ various algorithms to determine how incoming requests are distributed among the available servers. Some commonly used load balancing algorithms include:

1. Round Robin:

The round-robin algorithm evenly distributes incoming requests among the available servers in a cyclic manner. Each server takes turns handling a request, ensuring that the workload is distributed fairly across the server cluster.

2. Least Connections:

The least connections algorithm directs incoming requests to the server with the fewest active connections. This ensures that servers with lower loads receive more requests, preventing overload and ensuring efficient utilization of resources.

3. IP Hash:

The IP hash algorithm uses the client’s IP address to determine which server to send the request to. This ensures that requests from the same client are consistently directed to the same server, which can be useful for maintaining session state or handling client-specific data.

Load balancers can also perform health checks on servers to ensure that only healthy servers receive incoming requests. If a server becomes unresponsive or fails, the load balancer can automatically remove it from the server pool, preventing further requests from being sent to the faulty server.

Load balancing is crucial for ensuring high availability and fault tolerance in data server environments. By distributing the workload across multiple servers, load balancing reduces the risk of a single point of failure and allows for seamless scaling to handle increasing traffic or requests.

Redundancy and High Availability

In this section, we will discuss redundancy and high availability in data servers. We will explain how redundant systems and failover mechanisms are implemented to ensure uninterrupted service and minimize downtime. This section will emphasize the importance of redundancy in critical server environments.

Redundant Systems

Redundancy is the practice of duplicating critical components or systems within a data server environment to ensure continued operation and minimize the risk of failure. Redundant systems provide backup or alternative resources that can take over in the event of a failure, allowing for uninterrupted service and increased reliability.

There are various components of a data server that can be made redundant, including:

1. Power Supply:

Redundant power supplies ensure that the server continues to receive power even if one power supply fails. This prevents power outages from causing server downtime and allows for uninterrupted operation.

2. Network Connectivity:

Redundant network connectivity involves having multiple network interfaces or connections to ensure continuous network access. If one network interface fails or a network connection is lost, the server can rely on the redundant interfaces or connections to maintain connectivity.

3. Storage Devices:

Redundant storage devices, such as RAID (Redundant Array of Independent Disks) configurations, provide data redundancy and protection against disk failures. RAID arrays distribute data across multiple disks, allowing for data recovery and uninterrupted operation even if one or more disks fail.

4. Servers and Clusters:

Redundant server configurations involve having multiple servers or server clusters that can take over the workload if one server fails. These configurations often utilize load balancing mechanisms to distribute traffic among the servers and ensure high availability.

Redundant systems are essential in critical server environments where downtime can result in significant financial losses, data loss, or interruption of services. By implementing redundancy, organizations can minimize the risk of system failures and ensure the continuity of their operations.

Failover Mechanisms

Failover mechanisms are processes or systems designed to automatically switch to a backup or standby system when the primary system fails. Failover ensures seamless transition and uninterrupted service in the event of a failure, minimizing downtime and maintaining high availability.

Failover can be implemented at various levels, depending on the specific requirements and architecture of the data server environment. Some common failover mechanisms include:

1. Server Failover:

Server failover involves having redundant servers or server clusters where the backup servers automatically take over the workload if the primary server fails. This can be achieved through load balancing mechanisms that monitor the health of the servers and redirect traffic to the backup servers when necessary.

2. Network Failover:

Network failover mechanisms ensure uninterrupted network connectivity by automatically switching to backup network interfaces or connections if the primary interface or connection fails. This can involve redundant network switches, routers, or network interface cards (NICs) that seamlessly take over the network traffic.

3. Data Replication and Backup:

Data replication and backup mechanisms create redundant copies of data in real-time or at regular intervals. In the event of a failure, the replicated or backed-up data can be used to restore the system and ensure data integrity and availability.

Failover mechanisms are crucial for maintaining high availability and minimizing service disruptions in critical server environments. By automatically switching to backup systems or resources, failover mechanisms ensure that the impact of failures is minimized and that services can continue without interruption.

Security Measures for Data Servers

In this section, we will delve into the various security measures employed in data servers. We will explore concepts such as encryption, firewalls, access control, and data backup strategies. This section will provide insights into best practices for securing data servers against potential threats.

Encryption

Encryption is a fundamental security measure for protecting data on data servers. It involves transforming data into an unreadable format using cryptographic algorithms, making it inaccessible to unauthorized individuals or entities. Encryption ensures that even if data is intercepted or stolen, it remains protected and unusable without the decryption keys.

There are two main types of encryption used in data servers:

1. Data Encryption at Rest:

Data encryption at rest involves encrypting data stored on the server’s storage devices, such as hard disk drives or solid-state drives. This ensures that even if the physical storage devices are stolen or compromised, the data remains encrypted and inaccessible without the appropriate decryption keys.

2. Data Encryption in Transit:

Data encryption in transit involves encrypting data as it travels over networks, ensuring that it cannot be intercepted or read by unauthorized parties. This is particularly important when data is transferred between servers, clients, or other network devices. Secure protocols such as SSL/TLS are commonly used to encrypt data in transit.

By implementing encryption, organizations can protect sensitive data from unauthorized access and meet compliance requirements. It is important to carefully manage and secure encryption keys to ensure that only authorized individuals have access to them.

Firewalls

Firewalls are a critical component of network security for data servers. They act as a barrier between the server and the external network, monitoring and controlling incoming and outgoing network traffic based on predetermined rules and policies. Firewalls help prevent unauthorized access, malicious attacks, and the spread of malware or viruses.

Firewalls can be implemented at different levels, including:

1. Network Firewall:

A network firewall is a hardware or software-based solution that filters and controls network traffic based on predefined rules. It examines incoming and outgoing packets, allowing or blocking traffic based on factors such as IP addresses, port numbers, and protocols. Network firewalls are typically placed at the perimeter of the network to protect the entire server infrastructure.

2. Host-based Firewall:

A host-based firewall is a software-based firewall that runs directly on the server’s operating system. It provides an additional layer of protection by filtering network traffic specific to the server. Host-based firewalls can be more granular in their rule enforcement, allowing administrators to define specific rules for individual servers or applications.

Firewalls play a crucial role in securing data servers by preventing unauthorized access, blocking malicious traffic, and reducing the attack surface. It is essential to regularly update and maintain firewall configurations to ensure they reflect the latest security best practices and address emerging threats.

Access Control

Access control is the practice of managing and controlling user access to data servers and the resources they host. It ensures that only authorized individuals or entities can access and modify data, reducing the risk of unauthorized data manipulation, theft, or leakage.

Access control mechanisms can include:

1. User Authentication:

User authentication verifies the identity of individuals or entities attempting to access the data server. This can involve username/password combinations, biometric authentication, two-factor authentication (2FA), or other multifactor authentication methods. Strong password policies and regular password updates are crucial to preventing unauthorized access.

2. Access Privileges and Permissions:

Access privileges and permissions determine what actions users can perform and what data they can access or modify. By assigning appropriate access levels and permissions to users, organizations can ensure that users have access only to the data and resources necessary for their role. This minimizes the risk of accidental or intentional data breaches.

3. Role-Based Access Control (RBAC):

RBAC is a widely used access control model that assigns permissions to users based on their roles or job functions within the organization. RBAC simplifies access management by grouping users into roles and defining the permissions associated with each role. This allows for more efficient and scalable access control management.

Implementing robust access control measures helps protect data servers from unauthorized access, data breaches, and insider threats. Regular monitoring and auditing of user activities can help identify and mitigate potential security risks.

Data Backup and Recovery

Data backup and recovery strategies are crucial for ensuring data availability and protecting against data loss in the event of hardware failures, disasters, or other unforeseen circumstances. Data backup involves creating copies of data and storing them in a separate location, while data recovery involves restoring data from backups when needed.

Key considerations for data backup and recovery include:

1. Regular and Automated Backups:

Regular and automated backups ensure that data is consistently backed up without manual intervention. Backups can be performed at different intervals, depending on the criticality of the data and the rate of data changes. Incremental backups, where only changes since the last backup are saved, can help reduce backup time and storage requirements.

2. Offsite and Remote Backups:

Storing backups in an offsite or remote location is crucial for protecting against physical disasters or theft. Offsite backups ensure that data can be recovered even if the primary server location becomes inaccessible or compromised. Cloud-based backup solutions offer scalability, flexibility, and redundancy for storing backups in remote locations.

3. Testing and Verification:

Regular testing and verification of backups are essential to ensure the integrity and recoverability of data. Backups should be periodically tested to verify that data can be successfully restored and that the backup process is functioning as expected. This helps identify any issues or errors before they become critical.

By implementing robust data backup and recovery strategies, organizations can minimize the risk of data loss and ensure that critical data can be recovered in a timely manner. Regular testing and verification of backups are crucial to maintain the effectiveness of the backup and recovery processes.

Monitoring and Performance Optimization

This section will focus on monitoring tools and techniques used to optimize the performance of data servers. We will explore server monitoring software, performance metrics, and troubleshooting methodologies. This section will empower readers with the knowledge to identify and resolve performance issues effectively.

Server Monitoring Software

Server monitoring software plays a crucial role in optimizing the performance of data servers by providing insights into server health, resource utilization, and performance metrics. These tools monitor various aspects of server operation, including CPU usage, memory usage, disk I/O, network traffic, and application performance.

Server monitoring software typically offers features such as:

1. Real-time Monitoring:

Real-time monitoring allows administrators to track server performance and resource usage in real-time. This helps identify potentialperformance bottlenecks or issues as they occur, allowing for proactive troubleshooting and optimization.

2. Alerting and Notifications:

Monitoring software can send alerts and notifications to administrators when predefined thresholds or conditions are met. This ensures that critical issues are addressed promptly, minimizing downtime and improving server performance.

3. Historical Data Analysis:

Monitoring tools store historical data and provide analysis capabilities, allowing administrators to identify trends, patterns, and anomalies in server performance. This information can help optimize resource allocation, plan for future capacity needs, and troubleshoot recurring issues.

There are numerous server monitoring solutions available, ranging from open-source options like Nagios and Zabbix to commercial tools like SolarWinds and Dynatrace. The choice of monitoring software depends on the specific requirements of the data server environment, including the level of detail needed, scalability, and integration capabilities.

Performance Metrics

Monitoring the right performance metrics is crucial for optimizing data server performance. By tracking and analyzing relevant metrics, administrators can identify performance bottlenecks, diagnose issues, and make informed decisions to improve server efficiency. Some key performance metrics to monitor include:

1. CPU Utilization:

CPU utilization measures the percentage of time the CPU spends executing tasks. High CPU utilization may indicate a need for additional processing power or optimization of resource-intensive applications.

2. Memory Usage:

Monitoring memory usage helps ensure that the server has sufficient RAM for efficient data processing. High memory usage or frequent memory swaps to disk may indicate the need for additional memory or optimization of memory usage.

3. Disk I/O:

Disk I/O metrics, such as read and write speeds, help identify potential disk bottlenecks that can impact server performance. Monitoring disk I/O can help optimize storage configurations and identify potential hardware issues.

4. Network Traffic:

Monitoring network traffic helps assess the bandwidth utilization and identify potential network bottlenecks. By analyzing network traffic patterns, administrators can optimize network configurations and identify potential security threats.

5. Application Response Time:

Application response time measures the time taken for an application to respond to a user request. Monitoring application response time helps ensure optimal user experience and identify performance issues that may impact application performance.

These are just a few examples of the performance metrics that can be monitored. The choice of metrics depends on the specific requirements of the data server environment and the applications hosted on the server. It is important to establish baseline performance metrics and regularly review and analyze them to identify areas for improvement.

Troubleshooting Methodologies

Troubleshooting methodologies help administrators identify and resolve performance issues in data servers effectively. By following a systematic approach, administrators can isolate the root cause of performance problems and take appropriate actions to optimize server performance. Some common troubleshooting methodologies include:

1. Gather Information:

Start by gathering information about the server’s configuration, current performance metrics, and any recent changes or incidents. This information will provide insights into the server’s baseline performance and help identify any potential triggers for performance issues.

2. Identify Symptoms:

Identify the symptoms of the performance issue, such as slow response times, high CPU usage, or disk bottlenecks. This will help narrow down possible causes and focus troubleshooting efforts on the relevant areas.

3. Analyze Performance Metrics:

Review the collected performance metrics and analyze them to identify any anomalies or patterns that may be contributing to the performance issue. Compare current metrics with baseline data to identify any significant deviations.

4. Isolate the Cause:

Once potential causes have been identified, isolate the root cause of the performance issue. This may involve testing different components, disabling or modifying specific configurations, or analyzing log files for error messages or warnings.

5. Implement Solutions:

Based on the identified root cause, implement appropriate solutions to optimize server performance. This may involve adjusting server configurations, optimizing application code, upgrading hardware components, or reallocating resources based on the analysis and recommendations.

6. Monitor and Validate:

Monitor the server after implementing the solutions to ensure that the performance issue has been resolved. Validate the effectiveness of the solutions by monitoring performance metrics and comparing them to the established baselines.

By following a systematic troubleshooting methodology, administrators can effectively identify and resolve performance issues in data servers. Regular monitoring and proactive troubleshooting can help maintain optimal server performance and ensure a smooth user experience.

Future Trends in Data Servers

In this final section, we will discuss emerging trends and technologies in the world of data servers. We will explore topics such as cloud computing, virtualization, and edge computing, and discuss how these advancements are shaping the future of data servers. This section will provide readers with a glimpse into what lies ahead for data server technology.

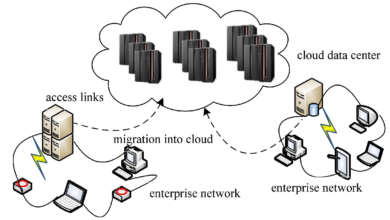

Cloud Computing:

Cloud computing is revolutionizing the way data servers are provisioned, managed, and utilized. With cloud computing, data servers are no longer limited to physical on-premises infrastructure. Instead, servers and resources are provisioned and managed in the cloud, offering scalability, flexibility, and cost efficiency. Cloud computing enables organizations to dynamically scale server resources as needed, reducing the reliance on physical hardware and allowing for more efficient resource utilization.

Virtualization:

Virtualization technology is becoming increasingly prevalent in data server environments. Virtualization allows for the creation of multiple virtual servers or virtual machines (VMs) on a single physical server, enabling better resource allocation and utilization. Virtualization provides benefits such as improved server consolidation, easier server management, and increased flexibility. It enables organizations to optimize their server infrastructure by running multiple operating systems and applications on a single physical server.

Edge Computing:

Edge computing is an emerging paradigm that brings processing and data storage closer to the source of data generation. Instead of relying on centralized data centers, edge computing deploys data servers and resources at the edge of the network, closer to the devices and sensors generating the data. This reduces latency, enhances real-time processing capabilities, and enables faster decision-making. Edge computing is particularly relevant in applications that require low latency, such as Internet of Things (IoT) devices, autonomous vehicles, and real-time analytics.

Artificial Intelligence and Machine Learning:

Artificial intelligence (AI) and machine learning (ML) are transforming data servers by enabling intelligent automation, predictive analytics, and enhanced data processing capabilities. AI and ML algorithms can optimize server performance, automate routine tasks, and provide valuable insights from vast amounts of data. AI and ML are being integrated into server management tools, monitoring software, and security systems to improve efficiency, optimize resource allocation, and enhance server security.

Green Computing:

Green computing, also known as sustainable computing, is an emerging trend in data server technology. With increasing concerns about energy consumption and environmental impact, data servers are being designed and optimized for energy efficiency. This includes using energy-efficient hardware components, implementing power management techniques, and adopting renewable energy sources for server operations. Green computing reduces carbon footprints, lowers operational costs, and aligns data server technology with sustainability goals.

The future of data servers is dynamic and constantly evolving. Cloud computing, virtualization, edge computing, AI, ML, and green computing are just a few of the trends that are shaping the future of data server technology. Keeping abreast of these advancements and leveraging them effectively will be key to maximizing the potential of data servers in the years to come.

In conclusion, this comprehensive guide has aimed to demystify the often perplexing jargon surrounding data servers. By breaking down the various terms and concepts, we hope to have provided readers with a solid foundation to understand and navigate the intricacies of data servers. Whether you’re a seasoned professional or just starting your journey in the world of data servers, this guide is designed to empower you with the knowledge and confidence to tackle any server-related challenge.

Remember, the world of data servers is constantly evolving, so it’s essential to stay updated with the latest advancements and trends. With this newfound understanding of data server jargon, you’ll be well-equipped to adapt and thrive in this ever-changing landscape.