Effective Data Server Monitoring

In today’s digital age, data servers play an indispensable role in the smooth operation of businesses and organizations. Because they are the backbone of data storage and processing, an effective monitoring system is crucial to ensure optimal performance and security. In this blog article, we will explore the importance of data server monitoring and provide comprehensive insights into the best practices and tools for effective monitoring.

Monitoring data servers is vital as it allows businesses to proactively identify and address potential issues before they escalate into major problems. By continuously monitoring server performance, businesses can minimize downtime, optimize resource utilization, and enhance overall system reliability. Furthermore, effective monitoring enables organizations to detect and mitigate security threats, safeguarding sensitive data from unauthorized access or breaches.

The Importance of Data Server Monitoring

Effective data server monitoring is at the core of ensuring the uninterrupted operation of critical systems and applications. By monitoring server performance in real-time, businesses can gain valuable insights into the health of their infrastructure, identify bottlenecks, and allocate resources efficiently. Monitoring also enables proactive identification and resolution of potential issues, reducing the risk of system failures that can lead to costly downtime and lost productivity.

Moreover, data server monitoring plays a crucial role in safeguarding sensitive information. By monitoring network traffic and system logs, businesses can detect and respond to security breaches promptly. This helps prevent data loss, unauthorized access, and potential legal and financial consequences. With the increasing prevalence of cyber threats, data server monitoring has become a necessity for organizations of all sizes.

The Benefits of Monitoring

Implementing a robust data server monitoring system offers numerous benefits to businesses:

- Improved Performance: Monitoring server metrics such as CPU usage, memory utilization, disk I/O, network traffic, and response time helps identify performance bottlenecks, enabling businesses to optimize resource allocation and enhance overall system performance.

- Enhanced Reliability: By continuously monitoring server health and proactively addressing potential issues, businesses can significantly reduce the risk of system failures and unplanned downtime, ensuring the availability of critical applications and services.

- Increased Security: Monitoring network traffic and system logs enables businesses to detect and respond swiftly to security breaches and potential vulnerabilities, protecting sensitive data from unauthorized access and potential data breaches.

- Cost Savings: By identifying and resolving performance issues early on, businesses can avoid costly downtime and optimize resource utilization, resulting in significant cost savings in the long run.

The Consequences of Inadequate Monitoring

On the other hand, inadequate data server monitoring can have severe consequences:

- Downtime and Loss of Productivity: Without proper monitoring, businesses may experience unexpected system failures and prolonged downtime, leading to a loss in productivity, dissatisfied customers, and potential revenue loss.

- Data Breaches and Security Vulnerabilities: Ineffective monitoring leaves businesses vulnerable to security breaches, unauthorized access, and data leaks, potentially resulting in damage to their reputation and legal consequences.

- Inefficient Resource Allocation: Without real-time visibility into server performance, businesses may allocate resources inefficiently, leading to underutilization or overutilization of server capacity and increased operational costs.

- Lack of Compliance: In industries with specific data protection regulations, inadequate monitoring may result in non-compliance, leading to fines, penalties, and reputational damage.

Key Metrics to Monitor

Monitoring the right metrics is crucial for gaining insights into the performance and health of data servers. By tracking key performance indicators (KPIs), businesses can identify trends, anomalies, and potential issues. Here are some essential metrics to monitor:

CPU Usage

The CPU (Central Processing Unit) is the brain of a server, responsible for executing instructions and processing data. Monitoring CPU usage helps identify whether the server is underutilized or overburdened. High CPU usage can indicate resource contention, poorly optimized applications, or the need for additional processing power. On the other hand, low CPU usage might suggest underutilization or the potential for workload consolidation.
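On Linux, one common way to measure CPU usage is to read the cumulative tick counters on the aggregate `cpu` line of `/proc/stat` twice and compare the two samples. The sketch below assumes that Linux-specific source. It also counts `idle` and `iowait` as idle time, which is a common convention but not the only one:

```python
def parse_cpu_line(line: str) -> list[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into integer tick counters."""
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in fields[1:]]


def cpu_utilization(prev: list[int], curr: list[int]) -> float:
    """Percentage of non-idle CPU time between two /proc/stat samples.

    Counter order: user, nice, system, idle, iowait, irq, softirq, steal, ...
    idle and iowait (indices 3 and 4) are treated as idle time.
    """
    # Keep the first 8 fields: guest/guest_nice are already included in user/nice.
    prev, curr = prev[:8], curr[:8]
    total_delta = sum(curr) - sum(prev)
    idle_delta = (curr[3] + curr[4]) - (prev[3] + prev[4])
    if total_delta <= 0:
        return 0.0
    return 100.0 * (total_delta - idle_delta) / total_delta
```

A single reading only reflects totals since boot. Sampling a few seconds apart and passing both parsed lines to `cpu_utilization` gives the average utilization over that interval.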

Memory Utilization

Memory, or RAM (Random Access Memory), is critical for storing and accessing data that the server needs to process. Monitoring memory utilization helps identify potential memory leaks, inadequate memory allocation, or excessive memory usage. High memory usage can lead to performance degradation or even system crashes, while low memory utilization may indicate opportunities for resource optimization.
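On modern Linux kernels, `/proc/meminfo` reports `MemTotal` and `MemAvailable`. `MemAvailable` is the kernel's estimate of memory that new workloads can use without swapping. The following minimal sketch assumes that file format and computes the share of memory in use:

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into a dict of values (in kB)."""
    values = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])
    return values


def memory_used_percent(meminfo: dict[str, int]) -> float:
    """Share of RAM not available to new workloads, as a percentage."""
    total = meminfo["MemTotal"]
    available = meminfo["MemAvailable"]
    return 100.0 * (total - available) / total
```

`MemAvailable` is preferable to `MemFree` for this purpose. Reclaimable page cache counts as available, so a server with little "free" memory is not necessarily under memory pressure.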

Disk I/O

Disk Input/Output (I/O) refers to the read and write operations the server performs against its storage devices. It is usually measured as operations per second (IOPS), throughput, and latency. Monitoring disk I/O helps identify potential performance bottlenecks, such as slow disk response times or high disk utilization. By analyzing disk I/O metrics, businesses can optimize storage configurations, identify the need for faster storage devices, or implement caching mechanisms to improve overall system performance.
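Linux exposes cumulative per-device counters in `/proc/diskstats`. Sector counts in that file always use 512-byte units, regardless of the device's physical sector size. Rates therefore come from the difference between two samples. In the sketch below, the `DiskSample` structure is a hypothetical helper, and field positions follow the classic 14-column layout of that file:

```python
from dataclasses import dataclass

SECTOR_BYTES = 512  # /proc/diskstats always counts 512-byte sectors


@dataclass
class DiskSample:
    reads: int            # reads completed
    writes: int           # writes completed
    sectors_read: int
    sectors_written: int
    io_ms: int            # milliseconds spent doing I/O (basis for %util)


def parse_diskstats_line(line: str) -> tuple[str, DiskSample]:
    """Return (device name, counters) for one /proc/diskstats line."""
    f = line.split()
    return f[2], DiskSample(
        reads=int(f[3]), writes=int(f[7]),
        sectors_read=int(f[5]), sectors_written=int(f[9]),
        io_ms=int(f[12]),
    )


def disk_rates(prev: DiskSample, curr: DiskSample, interval_s: float) -> dict[str, float]:
    """IOPS, throughput in bytes/s, and busy percentage over the interval."""
    return {
        "read_iops": (curr.reads - prev.reads) / interval_s,
        "write_iops": (curr.writes - prev.writes) / interval_s,
        "read_bytes_per_s": (curr.sectors_read - prev.sectors_read) * SECTOR_BYTES / interval_s,
        "write_bytes_per_s": (curr.sectors_written - prev.sectors_written) * SECTOR_BYTES / interval_s,
        "util_percent": 100.0 * (curr.io_ms - prev.io_ms) / (interval_s * 1000.0),
    }
```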

Network Traffic

Monitoring network traffic provides insights into the volume of data transmitted and received by the server. By tracking network traffic metrics, businesses can identify potential network congestion, bandwidth limitations, or abnormal data transfer patterns. This information helps optimize network infrastructure, plan for future capacity needs, and detect potential security threats or unauthorized access attempts.
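Interface byte counters are cumulative, and they wrap around when they reach their maximum. For example, 32-bit counters such as SNMP's `ifInOctets` wrap after about 4.29 GB, which a saturated gigabit link reaches in under a minute. The following minimal sketch converts two readings into a bit rate. It assumes the counter wrapped at most once between samples:

```python
def counter_delta(prev: int, curr: int, bits: int = 64) -> int:
    """Difference between two readings of a wrapping counter.

    Assumes at most one wrap between samples; a counter reset (for example
    after a device reboot) is indistinguishable from a wrap here.
    """
    if curr >= prev:
        return curr - prev
    return (2 ** bits - prev) + curr


def bits_per_second(prev_bytes: int, curr_bytes: int, interval_s: float, bits: int = 64) -> float:
    """Average traffic rate between two byte-counter readings."""
    return counter_delta(prev_bytes, curr_bytes, bits) * 8 / interval_s
```

Polling 32-bit counters more frequently than their wrap time, or switching to 64-bit counters where available, keeps the single-wrap assumption valid.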

Response Time

Response time measures the time it takes for the server to respond to a request. Monitoring response time helps businesses ensure that applications and services are performing within acceptable limits. By tracking response time, businesses can identify potential performance issues, bottlenecks, or network latency problems. This information can guide optimization efforts, enhance user experience, and prevent customer dissatisfaction.
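Averages can hide slow outliers, so response time is usually summarized with percentiles such as p95 or p99. The sketch below times an arbitrary callable with `time.perf_counter`. It computes percentiles with the nearest-rank method, which is one of several percentile definitions. Monitoring tools may interpolate differently:

```python
import math
import time
from typing import Callable


def timed_ms(fn: Callable[[], object]) -> float:
    """Run fn once and return its wall-clock duration in milliseconds."""
    start = time.perf_counter()
    fn()
    return (time.perf_counter() - start) * 1000.0


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]
```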

Monitoring Tools and Solutions

Many monitoring tools and solutions are available to help businesses effectively monitor their data servers. These tools offer various features and capabilities to track server performance, generate alerts, and provide valuable insights. Here are some popular options:

Open-Source Monitoring Tools

Open-source monitoring tools provide businesses with cost-effective solutions that can be customized to their specific needs. These tools often have active communities, regular updates, and a wide range of plugins and integrations. Some popular open-source monitoring tools include:

- Prometheus

- Nagios

- Zabbix

- Icinga

- Grafana

Open-source tools offer flexibility and scalability, allowing businesses to monitor a wide range of metrics and integrate with different technologies. However, they may require more technical expertise for setup and maintenance.

Commercial Monitoring Solutions

Commercial monitoring solutions provide comprehensive features, professional support, and user-friendly interfaces. These solutions often offer out-of-the-box integrations, advanced analytics, and automation capabilities. Popular commercial monitoring solutions include:

- SolarWinds

- Paessler PRTG Network Monitor

- Datadog

- New Relic

- Dynatrace

Commercial solutions provide ease of use and dedicated support, making them suitable for businesses with varying levels of technical expertise. However, they come at a cost, and businesses should consider their budget and specific requirements before investing in these solutions.

Custom Monitoring Solutions

In some cases, businesses may choose to develop custom monitoring solutions tailored to their unique needs. Custom solutions offer the flexibility to monitor specific metrics and integrate with existing systems. However, developing a custom monitoring solution requires significant development resources, expertise, and ongoing maintenance.

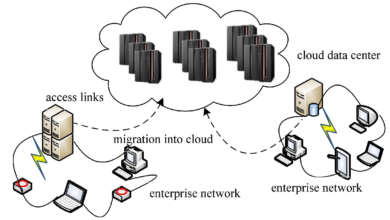

Cloud-Based Monitoring Services

Cloud-based monitoring services offer the advantage of scalability, ease of deployment, and cost-effectiveness. These services allow businesses to monitor their data servers from remote locations, utilize the cloud provider’s infrastructure, and benefit from managed monitoring services. Popular cloud-based monitoring services include:

- Amazon CloudWatch

- Google Cloud Monitoring

- Microsoft Azure Monitor

Cloud-based monitoring services offer a convenient option for businesses leveraging cloud infrastructure, eliminating the need for on-premises monitoring systems. However, organizations should consider the potential impact on data security and compliance when using third-party cloud services.

Setting Up an Effective Monitoring System

Implementing an effective data server monitoring system involves several key steps to ensure comprehensive coverage and reliable insights. Here is a step-by-step guide to setting up a robust monitoring system:

Step 1: Define Monitoring Goals

Start by clearly defining the goals and objectives of your monitoring system. Consider the specific metrics you want to monitor, the desired level of granularity, and the frequency of data collection. Align your monitoring goals with your business objectives and compliance requirements.

Step 2: Select the Right Monitoring Tools

Choose monitoring tools that align with your monitoring goals, budget, and technical capabilities. Evaluate different options based on their features, scalability, ease of use, and support. Consider whether you require open-source tools, commercial solutions, or cloud-based services based on your specific needs.

Step 3: Identify Critical Metrics

Identify the critical metrics that are relevant to your data server performance and security. This may include CPU usage, memory utilization, disk I/O, network traffic, response time, and other server-specific metrics. Consult with your IT team or system administrators to determine which metrics are most important for your specific environment.

Step 4: Configure Monitoring Alerts

Configure monitoring alerts to notify you of any abnormal behavior or thresholds being breached. Set up alerts based on predefined thresholds for each metric, so you can be alerted in real-time when any metric exceeds or falls below the specified values. By setting up appropriate alerts, you can take immediate action to address potential issues and prevent system failures or security breaches.
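A threshold rule needs three parts: a metric, a comparison, and a limit. A single noisy sample can make an alert "flap" on and off. To prevent this, many tools also require the condition to hold for several consecutive checks. The following minimal sketch illustrates that idea. The `ThresholdRule` class and its fields are hypothetical and not taken from any particular tool:

```python
from dataclasses import dataclass, field


@dataclass
class ThresholdRule:
    metric: str
    limit: float
    above: bool = True      # True: breach when value > limit; False: when value < limit
    for_samples: int = 3    # consecutive breaching samples required before firing
    _streak: int = field(default=0, init=False, repr=False)

    def check(self, value: float) -> bool:
        """Feed one sample; returns True on every sample while the alert condition holds."""
        breached = value > self.limit if self.above else value < self.limit
        self._streak = self._streak + 1 if breached else 0
        return self._streak >= self.for_samples
```

With `for_samples=3`, a CPU spike lasting one or two samples does not alert, but three breaches in a row do.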

Step 5: Establish Monitoring Policies

Establish monitoring policies to define how frequently data should be collected, how long it should be retained, and who has access to the monitoring data. Consider compliance requirements and industry best practices when establishing these policies. Ensure that monitoring policies are documented and communicated to all relevant stakeholders to ensure consistency and adherence to monitoring standards.
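Retention policies are easiest to enforce when every stored sample carries a timestamp. The sketch below assumes samples are kept in memory as `(timestamp, value)` tuples and drops anything older than the retention window. A real system would instead delete rows from its time-series store:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def apply_retention(
    samples: list[tuple[datetime, float]],
    retention: timedelta,
    now: Optional[datetime] = None,
) -> list[tuple[datetime, float]]:
    """Keep only samples whose timestamp falls within the retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - retention
    return [(ts, value) for ts, value in samples if ts >= cutoff]
```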

Step 6: Implement Performance Baselines

Implement performance baselines to establish normal operating ranges for each metric. By collecting and analyzing historical data, you can define performance baselines and set thresholds for deviations from the norm. This allows you to differentiate between expected variations and abnormal behavior, helping you prioritize and respond to critical issues more effectively.
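One simple way to turn historical data into a baseline is to compute the mean and standard deviation of past samples. Values more than k standard deviations from the mean are then treated as abnormal. The sketch below assumes fairly stable data and k = 3. Metrics with strong daily or weekly cycles usually need a separate baseline per hour of day or day of week:

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    mean: float
    stdev: float
    k: float = 3.0

    @classmethod
    def from_history(cls, history: list[float], k: float = 3.0) -> "Baseline":
        # statistics.stdev needs at least two samples.
        return cls(statistics.fmean(history), statistics.stdev(history), k)

    def bounds(self) -> tuple[float, float]:
        """Normal operating range: mean +/- k standard deviations."""
        return self.mean - self.k * self.stdev, self.mean + self.k * self.stdev

    def is_abnormal(self, value: float) -> bool:
        low, high = self.bounds()
        return value < low or value > high
```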

Step 7: Integrate with Incident Management

Integrate your data server monitoring system with your incident management process. This ensures that monitoring alerts are automatically escalated and captured as incidents, enabling efficient incident response and resolution. By integrating monitoring and incident management, you can streamline communication, track incident progress, and maintain a comprehensive incident history for future reference.

Step 8: Regularly Review and Fine-Tune

Regularly review and fine-tune your monitoring system to ensure its effectiveness and relevance. Monitor the performance of your monitoring tools, evaluate the accuracy of alerts, and make adjustments as needed. Additionally, periodically review your monitoring policies and metrics to ensure they align with changing business requirements and technological advancements.

Real-Time Monitoring vs. Periodic Monitoring

When it comes to data server monitoring, businesses have the option to choose between real-time monitoring and periodic monitoring, depending on their specific needs and available resources. Each approach has its advantages and limitations, and organizations should consider their requirements before deciding which method to implement.

Real-Time Monitoring

Real-time monitoring involves continuously collecting and analyzing data from data servers, providing immediate insights into server health and performance. Real-time monitoring allows businesses to detect and respond to issues as they occur, minimizing downtime and maximizing system availability.

Real-time monitoring is particularly valuable for mission-critical systems that cannot afford any interruptions. By monitoring server metrics in real-time, businesses can proactively identify potential bottlenecks, performance degradation, or security breaches. Real-time monitoring enables prompt incident response, allowing businesses to address issues before they escalate into major problems.

Periodic Monitoring

Periodic monitoring involves collecting data from data servers at regular intervals, such as every few minutes or hours. This approach is often more resource-friendly and suitable for businesses with limited monitoring capabilities or lower priority systems.

Periodic monitoring allows businesses to gather historical data and identify patterns or trends over time. While it may not provide real-time insights, periodic monitoring can still be valuable for identifying long-term performance issues or resource utilization trends. With periodic monitoring, businesses can still detect anomalies and take corrective actions, albeit with a slight delay.
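Periodic collection is essentially a polling loop. The sketch below schedules each collection against a fixed timeline, so slow collections do not make the interval drift over time. The `collect` callback is a placeholder for whatever gathers the metrics:

```python
import time
from typing import Callable


def poll(collect: Callable[[], None], interval_s: float, iterations: int) -> None:
    """Call collect() every interval_s seconds, `iterations` times, without drift."""
    next_run = time.monotonic()
    for _ in range(iterations):
        collect()
        next_run += interval_s
        delay = next_run - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        else:
            # The collection overran its slot: resynchronize instead of
            # firing a burst of catch-up collections.
            next_run = time.monotonic()
```

Using `time.monotonic` rather than wall-clock time keeps the schedule stable if the system clock is adjusted.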

Choosing the Right Approach

The choice between real-time monitoring and periodic monitoring depends on several factors, including:

- System Criticality: If your data servers host critical applications or services that require uninterrupted operation, real-time monitoring is recommended. It allows for immediate detection and resolution of issues, minimizing downtime and ensuring optimal performance.

- Resource Availability: Real-time monitoring typically requires more resources, such as processing power, storage, and network bandwidth. If resource availability is limited, periodic monitoring may be a more feasible option.

- Cost Considerations: Real-time monitoring solutions, especially commercial ones, may come at a higher cost. Evaluate your budget and cost constraints before deciding on the monitoring approach.

- Compliance Requirements: Some industries or regulatory frameworks may require real-time monitoring for specific systems or data. Ensure you understand the compliance requirements and align your monitoring approach accordingly.

In some cases, businesses may choose to implement a hybrid approach, combining real-time monitoring for critical systems and periodic monitoring for less critical ones. This allows for a balance between resource utilization and system availability.


Automated Incident Response

Automating incident response in data server monitoring can significantly improve efficiency, reduce response times, and minimize the impact of system failures or security incidents. By automating incident response, businesses can streamline their incident management process, ensure consistent actions are taken, and enhance collaboration between teams.

Automated Alert Escalation

Automated alert escalation ensures that critical alerts are promptly escalated to the appropriate personnel or teams. By defining escalation rules based on severity levels or predefined thresholds, businesses can ensure that alerts receive the necessary attention and are addressed in a timely manner. Automated alert escalation helps prevent alerts from being overlooked or delayed due to human error or oversight.
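Escalation rules can be expressed as a per-severity table of whom to notify and after how long an alert has stayed unacknowledged. The severities, delays, and recipients below are hypothetical placeholders:

```python
from datetime import timedelta

# Hypothetical escalation policy: (time unacknowledged, target) per severity.
ESCALATION_POLICY = {
    "critical": [(timedelta(0), "on-call engineer"),
                 (timedelta(minutes=10), "team lead"),
                 (timedelta(minutes=30), "engineering manager")],
    "warning": [(timedelta(0), "team channel"),
                (timedelta(hours=4), "on-call engineer")],
}


def escalation_targets(severity: str, unacknowledged_for: timedelta) -> list[str]:
    """Everyone who should have been notified by now for an unacknowledged alert."""
    steps = ESCALATION_POLICY.get(severity, [])
    return [target for delay, target in steps if unacknowledged_for >= delay]
```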

Remediation Workflows

Automating remediation workflows enables businesses to automatically execute predefined actions or scripts in response to specific alerts or incidents. By defining automated workflows, businesses can streamline the incident resolution process, reduce manual intervention, and minimize the time required to resolve issues. Remediation workflows can include actions such as restarting services, reallocating resources, or triggering backups.
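At its simplest, a remediation workflow maps an alert to an action. The sketch keeps a registry of handlers keyed by alert name. It defaults to a dry run, so each automated action is reported before anything is executed. The alert names are illustrative, and the handler assumes a systemd-managed service restarted via `systemctl restart`:

```python
import subprocess
from typing import Callable

# Registry of remediation actions keyed by (hypothetical) alert name.
REMEDIATIONS: dict[str, Callable[[dict, bool], str]] = {}


def remediation(alert_name: str):
    """Decorator that registers a function as the remediation for an alert."""
    def register(fn):
        REMEDIATIONS[alert_name] = fn
        return fn
    return register


@remediation("service_down")
def restart_service(alert: dict, dry_run: bool) -> str:
    cmd = ["systemctl", "restart", alert["service"]]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return " ".join(cmd)


def remediate(alert: dict, dry_run: bool = True) -> str:
    """Run (or, in dry-run mode, describe) the registered action for an alert."""
    handler = REMEDIATIONS.get(alert["name"])
    if handler is None:
        return "no automated remediation; escalate to a human"
    return handler(alert, dry_run)
```

Alerts without a registered handler fall back to human escalation, so automation only covers failure modes the team has explicitly decided are safe to handle unattended.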

Incident Documentation

Automated incident documentation ensures that all relevant information about an incident is captured and recorded for future reference. By automatically generating incident reports, businesses can maintain a comprehensive incident history, track the progress of incident resolution, and facilitate post-incident analysis. Automated incident documentation also helps with compliance requirements and facilitates knowledge sharing within the organization.

Benefits of Automated Incident Response

Implementing automated incident response in data server monitoring offers several benefits:

- Improved Response Time: Automated incident response reduces the need for manual intervention, shortening response times and ensuring issues are addressed promptly.

- Consistency and Standardization: Automation ensures that predefined actions and workflows are consistently followed, minimizing the risk of human error and ensuring adherence to best practices.

- Efficient Resource Utilization: Automated incident response helps optimize resource allocation by automatically reallocating resources or triggering remediation actions based on predefined rules.

- Enhanced Collaboration: Automation facilitates collaboration between different teams involved in incident resolution, ensuring effective communication and coordination.

- Continuous Improvement: By capturing incident data and generating reports, businesses can analyze trends, identify recurring issues, and implement measures to prevent future incidents.

However, it is essential to regularly review and update automated incident response workflows to ensure their effectiveness and relevance to changing business needs and evolving technology.

Leveraging Machine Learning for Anomaly Detection

Machine learning algorithms can be leveraged in data server monitoring to detect anomalies and predict potential issues before they occur. By analyzing historical data and patterns, machine learning algorithms can identify deviations from normal behavior and provide insights into potential performance or security issues.

Training Data Collection

Machine learning algorithms require training data to learn normal behavior and identify anomalies. Businesses can collect historical data from their data servers, including metrics such as CPU usage, memory utilization, network traffic, and response time. This training data should cover a significant period to capture different operating conditions and variations.

Feature Selection

Feature selection involves identifying the most relevant metrics and variables to train the machine learning algorithm. Not all metrics may contribute equally to anomaly detection, so it is crucial to select the most informative features. Consult with data scientists or machine learning experts to determine the best feature selection approach for your specific monitoring needs.

Algorithm Training and Validation

Once the training data and features are selected, businesses can train machine learning algorithms using supervised or unsupervised learning techniques. Supervised learning requires labeled data, where anomalies are explicitly identified. Unsupervised learning techniques, on the other hand, do not require labeled data and can identify anomalies based on deviations from normal behavior.

After training the algorithms, it is essential to validate their performance using separate validation datasets. This helps assess the accuracy of anomaly detection and fine-tune the algorithms if necessary.
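Validation typically compares the detector's flags with labeled anomalies in a held-out period. It reports two measures: precision (how many flags were real anomalies) and recall (how many real anomalies were flagged). The following minimal sketch assumes per-sample boolean labels. It also splits the data chronologically rather than randomly, because a random split of time-series data generally leaks future behavior into training:

```python
def precision_recall(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Precision and recall of anomaly flags against ground-truth labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def chronological_split(samples: list, train_fraction: float = 0.8) -> tuple[list, list]:
    """Split time-ordered samples into training and validation sets without shuffling."""
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]
```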

Implementing Anomaly Detection

Once the machine learning algorithms are trained and validated, they can be implemented in the data server monitoring system. The algorithms continuously analyze real-time data from the servers, comparing them to the learned patterns and identifying any deviations that may indicate anomalies or potential issues. When an anomaly is detected, alerts can be generated, and appropriate actions can be taken to address the issue.
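Before deploying a full machine learning model, a lightweight online detector is often useful as a comparison point. The sketch below is a simple statistical technique, not a trained ML model. It maintains exponentially weighted moving averages (EWMA) of the mean and variance and flags samples that fall far from the learned level. The `alpha`, `threshold`, and `warmup` values are assumed tuning parameters:

```python
import math


class EwmaDetector:
    """Online anomaly detector using exponentially weighted mean and variance."""

    def __init__(self, alpha: float = 0.1, threshold: float = 3.0, warmup: int = 10):
        self.alpha = alpha          # weight given to the newest sample
        self.threshold = threshold  # flag if |x - mean| > threshold * std
        self.warmup = warmup        # samples to observe before flagging anything
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def update(self, x: float) -> bool:
        """Score x against the current model, then fold it in. True means anomalous."""
        self.count += 1
        if self.count == 1:
            self.mean = x
            return False
        deviation = x - self.mean
        anomalous = (self.count > self.warmup
                     and abs(deviation) > self.threshold * math.sqrt(self.var))
        # Incremental EWMA update of mean and variance. Anomalous samples are
        # folded in too; a production detector might choose to skip them.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return anomalous
```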

Benefits of Machine Learning in Anomaly Detection

By analyzing data server metrics with machine learning algorithms for anomaly detection, businesses can benefit in several ways:

- Early Detection: Machine learning algorithms can identify anomalies and potential issues before they manifest as major problems. By detecting anomalies early on, businesses can take proactive measures to prevent system failures, optimize performance, and enhance security.

- Reduced False Positives: Machine learning algorithms can learn and adapt to the specific behavior of data servers, reducing false positive alerts. This helps minimize the time and effort spent investigating and addressing false alarms, allowing IT teams to focus on genuine issues.

- Improved Accuracy: Machine learning algorithms can analyze large volumes of data and identify complex patterns that may not be apparent to traditional monitoring approaches. This results in more accurate anomaly detection and a better understanding of system behavior.

- Predictive Maintenance: By analyzing historical trends and patterns, machine learning algorithms can predict potential issues or performance degradation. This enables businesses to proactively schedule maintenance activities, replace hardware components, or optimize system configurations, preventing disruptions and minimizing downtime.

- Continuous Learning: Machine learning algorithms can continuously learn and adapt to changing system behavior and evolving threat landscapes. As new data is collected, the algorithms can update their models and improve their accuracy over time.

It is important to note that implementing machine learning for anomaly detection in data server monitoring requires expertise in data science and algorithm development. Organizations should consider working with data scientists or leveraging pre-built machine learning solutions specifically designed for anomaly detection in server monitoring.

Data Server Monitoring Best Practices

Implementing best practices for data server monitoring can help ensure the effectiveness and efficiency of the monitoring system. Here are some key best practices to consider:

Regular Backups

Regularly back up your data servers to ensure that critical data is protected and can be restored in case of system failures or data loss. Establish backup schedules and verify the integrity of backups regularly. Consider implementing automated backup solutions to streamline the process and ensure consistency.
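Verifying backup integrity can be as simple as recording a cryptographic checksum when the backup is written and recomputing it later. The sketch below uses SHA-256 from Python's standard library. A matching checksum only proves the file is unchanged, not that it can actually be restored, so periodic test restores remain worthwhile:

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(path: Path, expected_hex: str) -> bool:
    """True if the file's current digest matches the one recorded at backup time."""
    return sha256_of(path) == expected_hex
```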

Log Analysis

Analyze server logs to gain insights into system behavior, detect security threats, and identify potential issues. Log analysis can help identify patterns, track user activities, and provide valuable information for troubleshooting. Consider utilizing log management tools or log analysis platforms to simplify log analysis and enhance visibility.
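As a small example of log analysis, the sketch below counts failed SSH password attempts per source address, a common signal of brute-force attacks. It assumes OpenSSH's usual `Failed password for ... from <ip> port ...` message, as written to `/var/log/auth.log` on Debian-style systems. Other distributions and log formats will need a different pattern:

```python
import re
from collections import Counter

# Matches e.g. "Failed password for root from 203.0.113.5 port 51234 ssh2"
# and "Failed password for invalid user admin from 203.0.113.5 port 22 ssh2".
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?\S+ from (\S+) port \d+")


def failed_logins_by_ip(lines) -> Counter:
    """Count failed password attempts per source address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def suspicious_ips(lines, threshold: int = 5) -> list[str]:
    """Source addresses with at least `threshold` failed attempts, sorted."""
    return sorted(ip for ip, n in failed_logins_by_ip(lines).items() if n >= threshold)
```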

Capacity Planning

Perform regular capacity planning to anticipate future resource requirements and avoid performance bottlenecks. By monitoring resource utilization trends and projecting future growth, businesses can proactively allocate resources, upgrade hardware components, or optimize system configurations.
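A simple way to project growth is to fit a straight line to recent usage and extrapolate to the point where it crosses capacity. The sketch below assumes daily disk-usage samples and roughly linear growth. Seasonal workloads or sudden step changes in usage need richer models:

```python
from typing import Optional


def linear_fit(ys: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept for ys sampled at x = 0, 1, 2, ..."""
    n = len(ys)
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(daily_usage_gb: list[float], capacity_gb: float) -> Optional[float]:
    """Days after the last sample until the fitted trend reaches capacity.

    Needs at least two samples. Returns None when usage is flat or shrinking.
    """
    slope, intercept = linear_fit(daily_usage_gb)
    if slope <= 0:
        return None
    last_x = len(daily_usage_gb) - 1
    x_full = (capacity_gb - intercept) / slope
    return max(0.0, x_full - last_x)
```

For example, usage of 100, 110, 120, 130, and 140 GB on consecutive days against a 200 GB volume fits 10 GB/day, which leaves about 6 days of headroom.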

Continuous Improvement

Data server monitoring should be an ongoing process of continuous improvement. Regularly review and analyze monitoring data, identify areas for improvement, and implement measures to enhance performance and security. Solicit feedback from system administrators, IT teams, and end-users to gain insights into potential issues and identify areas for optimization.

Monitoring Redundancy

Consider implementing monitoring redundancies to ensure system availability even in the event of monitoring system failures. This can involve using multiple monitoring tools, redundant alerting mechanisms, or distributing monitoring responsibilities across different teams or locations.
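A common redundancy pattern is a "dead man's switch". The primary monitoring system sends a heartbeat at regular intervals. An independent watchdog, ideally running on separate infrastructure, raises an alert when the heartbeats stop. The following minimal sketch shows the watchdog side, with the timeout as an assumed parameter:

```python
import time
from typing import Optional


class HeartbeatWatchdog:
    """Flags a monitored component as down when its heartbeats stop arriving."""

    def __init__(self, timeout_s: float):
        self.timeout_s = timeout_s
        self.last_beat: Optional[float] = None

    def beat(self, now: Optional[float] = None) -> None:
        """Record a heartbeat (timestamps in monotonic seconds)."""
        self.last_beat = time.monotonic() if now is None else now

    def is_down(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Never having received a heartbeat also counts as down.
        return self.last_beat is None or now - self.last_beat > self.timeout_s
```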

Documentation and Knowledge Sharing

Document your monitoring system setup, configuration, and processes to ensure consistency and facilitate knowledge sharing. This documentation can serve as a reference for future troubleshooting, onboarding new team members, or conducting audits. Regularly update the documentation to reflect any changes or improvements made to the monitoring system.

Training and Skill Development

Invest in training and skill development for your IT teams responsible for data server monitoring. Ensure that they have the necessary knowledge and expertise to effectively use the monitoring tools, interpret monitoring data, and respond to alerts promptly. Encourage continuous learning and staying updated with the latest trends and technologies in data server monitoring.

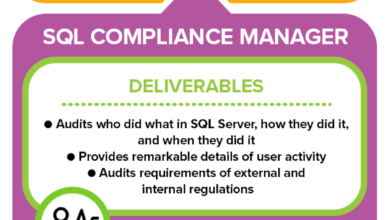

Regular Auditing and Compliance

Regularly audit your data server monitoring system to ensure compliance with relevant regulations and industry standards. This includes reviewing access controls, data privacy measures, and adherence to data protection policies. Engage external auditors if required to validate the effectiveness of your monitoring system.

Cloud-Based Monitoring Solutions

Cloud-based monitoring solutions offer numerous advantages, including scalability, ease of deployment, and cost-effectiveness. By leveraging cloud infrastructure for data server monitoring, businesses can overcome resource limitations, reduce maintenance efforts, and benefit from managed monitoring services. Here are some key benefits of cloud-based monitoring solutions:

Scalability

Cloud-based monitoring solutions allow businesses to scale their monitoring infrastructure as their needs evolve. With cloud providers offering flexible resource allocation, businesses can easily add or remove monitoring capacity based on their requirements. This ensures that the monitoring system can handle increasing data volumes or expanding server infrastructure without performance degradation.

Ease of Deployment

Cloud-based monitoring solutions eliminate the need for on-premises infrastructure and complex setup processes. With cloud providers offering pre-configured monitoring tools, businesses can quickly deploy monitoring agents or integrate with existing cloud services. This reduces the time and effort required for initial setup, allowing businesses to start monitoring their data servers more rapidly.

Cost-Effectiveness

Cloud-based monitoring solutions often follow a pay-as-you-go pricing model, enabling businesses to only pay for the resources they consume. This eliminates the need for upfront hardware investments or long-term commitments. Additionally, cloud-based solutions alleviate the burden of maintenance and upgrades, as these tasks are handled by the cloud provider. This results in cost savings and predictable monitoring expenses.

Managed Monitoring Services

Cloud providers often offer managed monitoring services, providing businesses with access to expert support and specialized monitoring capabilities. Managed services can include features such as automatic software updates, security patching, and 24/7 monitoring support. This allows businesses to focus on their core operations while relying on the cloud provider’s expertise for monitoring infrastructure.

Data Security Considerations

When leveraging cloud-based monitoring solutions, businesses should consider data security and privacy. Ensure that the cloud provider adheres to industry-standard security practices, implements robust access controls, and offers encryption options for data transmission and storage. It is also essential to evaluate data residency requirements and ensure compliance with relevant data protection regulations.

Ensuring Compliance with Data Protection Regulations

Compliance with data protection regulations is crucial for businesses handling sensitive data. When implementing data server monitoring, it is important to align monitoring practices with applicable regulations, such as the General Data Protection Regulation (GDPR) or the Health Insurance Portability and Accountability Act (HIPAA). Here are some key considerations for ensuring compliance:

Data Minimization

Collect and retain only the necessary data for monitoring purposes. Minimize the collection of personal or sensitive data that is not directly related to server performance or security. Ensure that data retention periods align with legal requirements and regularly purge monitoring data that is no longer needed.
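When log lines collected for monitoring may contain personal data, one option is to redact that data before storage. The sketch below masks email addresses and IPv4 addresses with regular expressions. The patterns are deliberately simple and will miss some formats (IPv6 addresses, for example), so treat them as a starting point rather than a complete personal-data filter. Source addresses may still be needed for security analysis, so decide per data stream what to keep:

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(line: str) -> str:
    """Replace email and IPv4 addresses with fixed placeholders."""
    line = EMAIL.sub("[email]", line)
    return IPV4.sub("[ipv4]", line)
```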

Access Controls

Implement strong access controls to limit access to monitoring data to authorized personnel only. Define roles and responsibilities for individuals accessing monitoring systems and grant appropriate permissions based on the principle of least privilege. Regularly review access controls and revoke access when no longer required.

Data Encryption

Encrypt monitoring data both in transit and at rest to protect it from unauthorized access. Use secure protocols such as TLS (the successor to SSL, all versions of which are now deprecated) for data transmission between monitoring agents and the monitoring system. Additionally, consider encrypting data stored in monitoring databases or cloud storage to safeguard it from potential breaches.
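In Python, for instance, the standard `ssl` module can configure a monitoring agent's client connections to require certificate verification and a modern protocol version. The following sketch assumes the monitoring backend presents a certificate trusted by the system's CA store:

```python
import ssl


def monitoring_tls_context() -> ssl.SSLContext:
    """Client-side TLS context: verified certificates, hostname checks, TLS 1.2 or newer."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to standard-library clients such as `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`.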

Audit Trails

Implement audit trails to track and record activities related to the monitoring system. This includes logging access attempts, modifications to monitoring configurations, and any actions performed on monitoring data. Audit trails serve as evidence of compliance and facilitate incident investigation or forensic analysis if required.
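One way to make an audit trail tamper-evident is hash chaining: each entry stores a hash of the previous entry. Altering or removing an earlier record then breaks the chain from that point on. Truncation at the end is only detectable if the latest hash is also stored somewhere separate. The following minimal sketch is in-memory only; a production trail would also need durable, access-controlled storage:

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(entry: dict) -> str:
    payload = json.dumps({k: entry[k] for k in ("time", "actor", "action", "prev")}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(trail: list[dict], actor: str, action: str) -> dict:
    """Append a record linked to the hash of the previous one."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "prev": trail[-1]["hash"] if trail else GENESIS,
    }
    entry["hash"] = _entry_hash(entry)
    trail.append(entry)
    return entry


def verify_trail(trail: list[dict]) -> bool:
    """True if every entry's hash and back-link are intact."""
    prev = GENESIS
    for entry in trail:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(entry):
            return False
        prev = entry["hash"]
    return True
```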

Vendor Compliance

Ensure that the monitoring tools or solutions you choose comply with relevant data protection regulations. Review the privacy policies and terms of service of the monitoring vendors to ensure they align with your compliance requirements. Consider engaging with vendors who have undergone third-party audits or certifications related to data protection and security.

Data Breach Response

Have a well-defined data breach response plan in place that outlines the steps to be taken in the event of a security incident or data breach. This plan should include incident notification procedures, communication protocols, and the involvement of relevant stakeholders, such as legal or compliance teams. Regularly test and update the response plan to ensure its effectiveness.

Consult with legal or compliance professionals to ensure that your data server monitoring practices align with applicable regulations and implement necessary measures to protect sensitive data.

In conclusion, effective data server monitoring is vital for ensuring the optimal performance and security of businesses’ critical systems and data. By monitoring key metrics, leveraging automation and machine learning, and following best practices, organizations can proactively detect and address issues, minimize downtime, optimize resource utilization, and protect sensitive information. Whether through real-time monitoring, periodic monitoring, or cloud-based solutions, businesses can build a robust monitoring system that contributes to their overall success in today’s interconnected world.